Synopsis

Data quality is critical to the correct operation of an organisation’s systems. In most countries, there is a legal obligation to ensure that the quality of data in systems, particularly financial systems, is maintained at a high level.

For instance, the Australian Prudential Regulation Authority’s [APRA] Prudential Practice Guide CPG235 ‘Managing Data Risk’ Clause 51 states:

“Data validation is the assessment of the data against business rules to determine its fitness for use prior to further processing. It constitutes a key set of controls for ensuring that data meets quality requirements.”

There are times in the life of an organisation when lack of data quality, or lack of evidence of data quality, can rise to the level of an existential threat, for instance when:

- Failures in data quality cause harm to customers, especially if this becomes public knowledge.

- Regulators issue enforceable actions.

- The organisation engages in M&A activity.

- Poor data quality inhibits the organisation’s ability to respond in a timely way to compelling market forces.

So, what is data quality?

Data quality is the measure of the extent to which the data complies with the business rules:

- that determine its validity, if the data is acquired from external sources; or,

- that determine its correctness, if the data is derived internally.
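To make this definition concrete, here is a minimal Python sketch that treats data quality as two ratios: the share of validation checks that inbound data passes, and the share of internally derived values that match their calculation rule. The `Member` record, its field names, and the 1% fee rule are hypothetical illustrations, not rules from any real system.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable, List

@dataclass
class Member:
    """Hypothetical record: two inbound attributes and one derived attribute."""
    member_id: str
    date_of_birth: str       # inbound: acquired from an external source
    contributions: Decimal   # inbound
    fee: Decimal             # derived internally by a calculation rule

ValidationRule = Callable[[Member], bool]      # checks validity of inbound data
CalculationRule = Callable[[Member], Decimal]  # re-derives an internal value

def validity(records: List[Member], rules: List[ValidationRule]) -> float:
    """Share of (record, rule) checks that pass."""
    checks = [rule(r) for r in records for rule in rules]
    return sum(checks) / len(checks) if checks else 1.0

def correctness(records: List[Member], attr: str, rule: CalculationRule) -> float:
    """Share of records whose stored derived value equals the rule's output."""
    matches = [getattr(r, attr) == rule(r) for r in records]
    return sum(matches) / len(matches) if matches else 1.0

# Illustrative rules (assumptions for this example only).
def has_date_of_birth(m: Member) -> bool:
    return m.date_of_birth != ""

def contributions_non_negative(m: Member) -> bool:
    return m.contributions >= 0

def one_percent_fee(m: Member) -> Decimal:
    return (m.contributions * Decimal("0.01")).quantize(Decimal("0.01"))

records = [
    Member("M1", "1970-01-01", Decimal("1000.00"), Decimal("10.00")),
    Member("M2", "", Decimal("500.00"), Decimal("6.00")),
]
inbound_quality = validity(records, [has_date_of_birth, contributions_non_negative])
derived_quality = correctness(records, "fee", one_percent_fee)
print(inbound_quality, derived_quality)  # 0.75 0.5
```

Without the rules, neither ratio can be computed at all, which is the point of the paragraph that follows.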

Ergo, you cannot measure data quality if you do not have the rules readily available to use as the yardstick; you cannot have ‘master data’ if you do not also have ‘master rules’. Yet, no organisation that we are aware of has implemented the concept of ‘master rules’ in any practical sense.

Sooner or later, your organisation will confront a period when the risks surrounding data integrity pose a significant threat. At that point, you will have no choice but to consider implementing master rules. This article describes how.

The Backstory

The article “Requirements and the Beast of Complexity”[1] by this author was published in Modern Analyst ten years ago, with its relevance confirmed by more than 40,000 downloads since.

The Beast article targeted requirements specifications as a significant weak link in the traditional systems development life-cycle. It presented a case for a new approach to requirements gathering and specification that is based on analysis of business policy to identify core business entities and the rules that govern their respective data state changes. These rules were to then be captured and described as ‘decision models’, to be implemented as executables in front-line systems.

This approach was proposed in contrast to the then (and still) popular data-centric and process-centric requirements approaches, which generally position rules as dependents of either data or process, rather than as first order requirements in their own right. In fact, the Beast article claimed that rules are superior requirements to both data and process, because we can derive data and process requirements from the rules, but not the reverse.

For the sake of clarity, a rule in this context is an implementation of business policy that also inherently defines business data quality – it either validates the data if it is inbound, or it manufactures the data if it is derived. In either case, rules are the essence of data quality. Further, for a rule to implement policy, it must be executable – a rule that is not executable is a description of a rule, not an implementation of a rule; a description cannot be the ‘source of truth’ if the implementation does not concur.

In the decade since the Beast article was published, our business has been deeply involved in audit, remediation, and migration of legacy systems, with the scope of these engagements spanning pensions (millions of member accounts), insurance (millions of policies), and payroll (dozens of tier one corporate and government payrolls).

Our audit, remediation, and migration activity spans most of the major technology platforms that have been relevant over the past 50 years, including many that pre-date relational databases. On these platforms, we have recalculated and remediated individual accounts going back as far as 35 years.

This activity has provided a useful look into the rear-view mirror that has confirmed the original thesis of the Beast article, and at the same time it has exposed a dearth of effective rules management in most organisations today.

Master Data Management

Master Data is a concept as old as computing itself[2]. It can be somewhat loosely described as an inventory of computer data. A more formal definition is proposed in a current Gartner paper as follows:

“Master data management (MDM) is a technology-enabled discipline in which business and IT work together to ensure the uniformity, accuracy, stewardship, semantic consistency and accountability of the enterprise’s official shared master data assets. Master data is the consistent and uniform set of identifiers and extended attributes that describes the core entities of the enterprise including customers, prospects, citizens, suppliers, sites, hierarchies and chart of accounts.”[3]

In practice, authoritative categorisation and management of data as anticipated by Master Data Management is usually limited to the COBOL copybooks of earlier systems, or the Data Definition Language [DDL] of relational databases and similar systems. In either case, we can extract both the data definitions and the data itself in hours using fully automated means. In the 50-year span of the dozens of systems addressed by our engagements, we have never encountered reliable and accurate ‘master data’ descriptions outside of these original system-derived sources.

But regardless of the efficacy or otherwise of any attempt at ‘master data management’, there is an elephant in the room – even a complete and authoritative “consistent and uniform set of identifiers and extended attributes that describes the core entities of the enterprise” as proposed by Gartner tells us nothing about how the data complies with the business policies that govern it. We get nothing except its name, datatype, and relative position in a classification system. Often, even an authoritative description or definition of the data cannot be attempted without first-principles analysis of system artefacts, which usually means code.

For instance, a project that we have just completed generated an ontology from multiple disparate source systems, which ended up with both ‘last name’ and ‘surname’ as attributes of a person. Without looking at the underlying code, there is no way to differentiate between these two attributes, which sometimes held different values for the same person (hint: there was a difference in usage!).

Master Rules Management

In an echo from the Beast article, we again confront the issue that the rules that govern the process of capturing and validating inbound data, and which formally derive all other data, are in fact the exclusive arbiters of the meaning of the data. Without understanding these rules, the data remains opaque. In short, Master Data Management is implausible without an equally robust ‘Master Rules Management’ – and Master Rules Management is not a concept that we have ever found in practice.

Whenever we are doing audit, remediation, and/or migration, it is the rules that we need to find that will inform us about the data in general, and about its quality in particular. Our view is that data quality is actually measured by how well the data conforms to its rules – validation rules for input data, and calculation rules for derived data. It is the rules analysis that takes the vast majority of time and effort. When we are merely looking at data in isolation, there is usually a starting point that can be derived automatically by reading the DDL, copybooks, or other file system definitions – but not so with rules; it is first principles all the way. All the data can tell us is that there must be some rules somewhere!

We are regularly asked whether we can auto-extract the rules in the same way that we extract the data.

The answer is no, even though we do have tools to assist this process. But more importantly, full auto extraction of rules would not solve the issue, not even close!

This is because effective rules management, as described by the Beast article, was not practiced at the inception of the systems. As a result, the full body of rules has grown organically and without formal structure over a long period of time – like weeds rather than a garden, usually widely dispersed across multiple systems and manual processes. The multiplicity of rules often means that the same rule intent is implemented in different, and even conflicting ways. Rules are often applied piecemeal across multiple systems in an order that may be unclear. The net effect is that the rules that we extract from source systems are regularly shown to be denormalised, conflicted, incomplete, and sometimes simply wrong.

Furthermore, there is usually little or no provenance for the rules. The background, the intent, the justification, and the approval of rules are rarely documented, and even the verbal story-telling gets lost over time. Definitions of correctness become elusive. This is the key issue highlighted by the Beast article: the rules were never recognised as first order requirements in the first place, and so never went through a defined ‘rules development life cycle’. Simply put, they do not exist in a formal, ordered way, and their actual existence must now be surmised transitively by looking for their effect on the data.

Consequently, even if we were to automatically extract the rules, they would still need to go through an intense process of analysis, normalisation, and refactoring, followed by testing, and eventually formal (re)approval. That is, we need to backfill the ‘rules development life cycle’ to elevate the rules to the level required for Master Rules use, and this cannot be achieved by automated extraction because the required rules metadata simply does not exist, and in all probability never did.

Getting Started

With this in mind, we approach rules (re)discovery from first principles, and we recollate the rules into a common, business-wide, fully normalised rules repository. First-principles sources include published market documents (including product disclosure statements and similar) and their internal policy document counterparts, supported by verbal recollections from SMEs; system documentation, if any; and of course, code inspections, both automated and manual.

The key to efficiency is to be able to quickly and easily capture, normalise, refactor, and test the rules, which is where we do apply automation at scale. Assuming that the ‘common, business-wide, fully normalised rules repository’ is also fully executable; and assuming that we have already auto-extracted the data; then we can add simple design patterns that make rules discovery and clean-up an easily repeatable, fast and rigorous daily cycle – that is, data can be extracted and rules developed, applied, and verified, all on a daily basis, with real-time progress reporting to ensure appropriate management oversight.

The processes required to achieve Master Rules are significantly more complex when done in arrears (which is always the case, given the weed-like growth of rules described above), which is why we keep audit, remediation, and migration so clearly in focus; each of these processes has an important role to play in backfilling the rules metadata that is required for effective Master Rules Management. Simply asking a business analyst to ‘go and document the rules’ won’t cut it if you are under existential threat.

The Scope of Rules

A word on the scope of rules. The actual rules that implement the source system intent, as ordained by business policies (including every validation, every calculation, every adjudication), are merely the starting point and provide only the baseline rules. To these we must add the additional rules for audit, remediation, and migration that are required to support the development of Master Rules in arrears, that is, ‘after the fact’.

Audit is the simplest of our three processes and requires new rules to mirror the intent of the originals (e.g. revalidation, recalculation); plus an overlay of semantic validation rules (colloquially described as ‘common sense’ rules); and rules which generate validation ‘alerts’ (i.e. new data) as required to give effect to the purpose of the audit.
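As an illustration of audit rules that generate alerts as new data, the sketch below applies two ‘common sense’ checks to a hypothetical `Policy` record and emits an `Alert` for each failure. The record shape, the alert codes, and the 0 to 120 age range are assumptions chosen for the example, not rules taken from any real portfolio.

```python
from dataclasses import dataclass
from datetime import date
from typing import List

@dataclass(frozen=True)
class Alert:
    entity_id: str
    alert_type: str
    message: str

@dataclass(frozen=True)
class Policy:
    policy_id: str
    start_date: date
    end_date: date
    insured_age: int

def audit_policy(p: Policy) -> List[Alert]:
    """Apply illustrative semantic rules; each failure becomes a new Alert record."""
    alerts = []
    if p.end_date < p.start_date:
        alerts.append(Alert(p.policy_id, "DATE_ORDER", "end date precedes start date"))
    if not 0 <= p.insured_age <= 120:
        alerts.append(Alert(p.policy_id, "AGE_RANGE", f"implausible age {p.insured_age}"))
    return alerts

portfolio = [
    Policy("P1", date(2001, 1, 1), date(2021, 1, 1), 45),
    Policy("P2", date(2005, 6, 1), date(2004, 6, 1), 130),
]
alerts = [a for p in portfolio for a in audit_policy(p)]
for a in alerts:
    print(a.entity_id, a.alert_type, a.message)
```

Because the alerts are ordinary data, they can be counted, filtered by type, and routed to SMEs in the same way as any other attribute.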

Remediation includes both the baseline and audit rules as above, but also usually includes a substantial and complex overlay of rules to correct and make good any errors. When we identify incorrectly calculated amounts in past periods it is not sufficient to simply recalculate those values. Each recalculated value must be reapplied to the full entity level dataset. This can entail pro-rating over extended periods of time both backwards and forwards, plus recalculation of all subsequent values with compounding effects applied – and this is often recursive, that is, as downstream amounts are updated, they in turn cause more recalculations, and so on.
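The sketch below shows, in miniature, why correcting one historical amount is not a single fix: with compounding, a corrected contribution in the first period changes every subsequent balance. It is a minimal illustration assuming a single account, a simple per-period compounding formula, and rounding to cents; the cross-account and fund-level recursion described above is not modelled.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import List, Tuple

CENT = Decimal("0.01")

def roll_forward(opening: Decimal, contributions: List[Decimal],
                 rates: List[Decimal]) -> List[Decimal]:
    """Closing balance per period: (previous balance + contribution) * (1 + rate)."""
    balances = []
    balance = opening
    for contribution, rate in zip(contributions, rates):
        balance = ((balance + contribution) * (1 + rate)).quantize(CENT, ROUND_HALF_UP)
        balances.append(balance)
    return balances

def remediate(opening: Decimal, contributions: List[Decimal], rates: List[Decimal],
              period: int, corrected: Decimal) -> Tuple[List[Decimal], List[Decimal]]:
    """Correct one historical contribution and recompute every later balance."""
    fixed = list(contributions)
    fixed[period] = corrected
    original = roll_forward(opening, contributions, rates)
    recalculated = roll_forward(opening, fixed, rates)
    deltas = [new - old for old, new in zip(original, recalculated)]
    return recalculated, deltas

contributions = [Decimal("100")] * 3
rates = [Decimal("0.05")] * 3
recalculated, deltas = remediate(Decimal("0"), contributions, rates, 0, Decimal("200"))
print(deltas)  # [Decimal('105.00'), Decimal('110.25'), Decimal('115.77')]
```

A single 100.00 correction in period one grows into a 115.77 difference by period three, and each of those deltas would in turn feed any downstream balances.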

Imagine a 35-year pension scheme with thousands of calculation variations over time, but with some original amounts deposited in the wrong fund. Or, one system in the hazy past ignored leap years, thereby missing accruals for several days per decade. Now imagine the effects of correcting those original errors on the account, the fund, and on all related balances.

In some cases, entirely new entity level datasets need to be built to support remediation, for instance, daily accrual data that does not exist in the source data.
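Building a daily accrual dataset that the source system never stored is also what exposes errors such as the leap-year omission described above. The sketch assumes a constant balance, a simple daily accrual of balance × rate ÷ days-in-year, and a legacy system that simply skipped 29 February; all three are illustrative assumptions.

```python
import calendar
from datetime import date, timedelta
from decimal import Decimal
from typing import Dict

def daily_accruals(start: date, end: date, balance: Decimal, annual_rate: Decimal,
                   skip_leap_day: bool = False) -> Dict[date, Decimal]:
    """Build a daily accrual series for [start, end).

    skip_leap_day=True mimics a hypothetical legacy system that never accrued
    on 29 February.
    """
    accruals = {}
    day = start
    while day < end:
        is_leap_day = day.month == 2 and day.day == 29
        if not (skip_leap_day and is_leap_day):
            days_in_year = 366 if calendar.isleap(day.year) else 365
            accruals[day] = balance * annual_rate / days_in_year
        day += timedelta(days=1)
    return accruals

start, end = date(2000, 1, 1), date(2010, 1, 1)
actual = daily_accruals(start, end, Decimal("100000"), Decimal("0.05"))
legacy = daily_accruals(start, end, Decimal("100000"), Decimal("0.05"), skip_leap_day=True)
missed_days = sorted(set(actual) - set(legacy))
shortfall = sum((actual[d] for d in missed_days), Decimal("0"))
print(missed_days)                          # the three leap days in the decade
print(shortfall.quantize(Decimal("0.01")))  # 40.98
```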

Then additional rules need to be applied to calculate any remediation outcome as new data values, and these can also introduce new complexity – e.g. beneficial changes for the customer may be handled differently to disadvantageous changes. Finally, correcting entries may need to be generated to update the original system, and to inform the stakeholders including customers, auditors, and regulators.

Migration certainly requires the audit rules, and may also require remediation, either as part of or prior to the migration itself. Provided that these have been achieved successfully, then the remaining migration rules are primarily transformative between the source system formats and the target system formats, bearing in mind that most migrations involve more than a single source and target system.

The transformation rules will often mean interpreting the inbound data and casting it into a format that is understood by the target system. For instance, all variations of true/false (Y/N, field present, field not present, field starting with letter ‘A’, and a limitless number of other variations designed by old-school programmers to avoid creating a clear, self-defining Boolean value) should be converted into a single industry-standard format (say, true/false); for older systems, multi-use fields (even bit switches) need to be deconstructed into multiple single-use fields (sometimes dozens); and most enumerations will need to be converted, often on a many-to-many basis. Finally, the data needs to be moved to a new field, possibly with a new datatype, and attached to a new key before being exported into the target system(s). All of these transformation activities are designed to improve data quality.
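A few of these transformation rules can be sketched in Python: normalising assorted legacy flag encodings to a Boolean, splitting a multi-use status byte into single-use fields, and mapping an enumeration where the meaning of a legacy code depends on context. Every code list, bit position, and field name here is hypothetical, and so is the choice to treat an absent field as false; in practice these are outputs of the rules analysis, not inputs to it.

```python
from typing import Dict, Optional

# Hypothetical legacy flag encodings; the real list comes from rules analysis.
TRUE_CODES = {"Y", "YES", "T", "1"}
FALSE_CODES = {"N", "NO", "F", "0", ""}

def to_bool(raw: Optional[str]) -> bool:
    """Normalise a legacy flag to a Boolean; None means 'field not present'."""
    if raw is None:
        return False
    code = raw.strip().upper()
    if code in TRUE_CODES:
        return True
    if code in FALSE_CODES:
        return False
    raise ValueError(f"unmapped flag value {raw!r}")  # surface it rather than guess

# Hypothetical multi-use status byte: each bit becomes an independent single-use field.
STATUS_BITS = {0: "smoker", 1: "joint_life", 2: "premium_waived", 3: "in_arrears"}

def split_status(byte_value: int) -> Dict[str, bool]:
    return {name: bool((byte_value >> bit) & 1) for bit, name in STATUS_BITS.items()}

# Hypothetical enumeration mapping: the same legacy code means different things
# for different products, so the key includes the product.
FREQUENCY_MAP = {
    ("LIFE", "M"): "MONTHLY",
    ("LIFE", "4"): "FOUR_WEEKLY",
    ("IP", "M"): "MONTHLY",
    ("IP", "4"): "QUARTERLY",
}

def map_frequency(product: str, legacy_code: str) -> str:
    try:
        return FREQUENCY_MAP[(product, legacy_code)]
    except KeyError:
        raise ValueError(f"no mapping for {product}/{legacy_code}") from None

print(to_bool(" y "), to_bool(None))  # True False
print(split_status(0b1010))
print(map_frequency("IP", "4"))       # QUARTERLY
```

Raising on unmapped values, rather than defaulting, keeps unknown encodings visible so that they can be analysed and turned into explicit rules.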

In summary, audit, remediation, and migration add significant layers of rule complexity to the existing population of ‘business rules’.

So how do we approach this substantial task?

Getting the Rules Right is a High Stakes Game

Deriving rules from operational systems is a fraught task – after substantial effort, how do you PROVE that you have understood the rules, and therefore also the data, correctly, consistently, and completely?

It is not enough to just get the rules right; we must get the rules right for each and every transaction, and this cannot be taken for granted. For instance, we have seen premium calculations that recalculated critical dates inside the calculation itself for one or two product types only, with no record of the newly derived dates being kept at all. This affected the premium amount for tens of thousands of policies in a 300,000-policy portfolio: hundreds of thousands of dollars of variation per premium cycle, potentially occurring for decades.

For an audit or remediation, the legal horizon for correctness is typically around 10 years. If we are migrating a system that has implemented published customer obligations and promises (e.g. financial product disclosure statements), that horizon becomes the full life of the entities in the dataset – not the system, but the dataset. The 35 year audit and remediation that we referred to earlier was migrated over three entirely different systems during that period – and not all of the migrations were ‘clean’. This required addressing such novel concepts as changing decimal precision in the calculations either side of the boundaries of the system changes.
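One way to handle changing decimal precision across system boundaries is to make the rounding rule itself date-effective, so that a recalculation uses the precision of whichever system was live at the time. The sketch below illustrates that idea; the era dates and precisions are invented for the example.

```python
from datetime import date
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical system eras: (date the system went live, rounding quantum it used).
PRECISION_ERAS = [
    (date(1985, 1, 1), Decimal("1")),       # first system: whole dollars
    (date(1996, 7, 1), Decimal("0.01")),    # second system: cents
    (date(2009, 4, 1), Decimal("0.0001")),  # third system: four decimal places
]

def quantum_for(effective: date) -> Decimal:
    """Return the rounding quantum of the system that was live on the effective date."""
    quantum = None
    for live_from, q in PRECISION_ERAS:  # eras are listed in date order
        if effective >= live_from:
            quantum = q
    if quantum is None:
        raise ValueError(f"{effective} predates the earliest known system")
    return quantum

def recalc_interest(balance: Decimal, rate: Decimal, effective: date) -> Decimal:
    return (balance * rate).quantize(quantum_for(effective), rounding=ROUND_HALF_UP)

for d in (date(1990, 1, 1), date(2000, 1, 1), date(2015, 1, 1)):
    print(d, recalc_interest(Decimal("1234.56"), Decimal("0.0525"), d))
# 1990-01-01 65
# 2000-01-01 64.81
# 2015-01-01 64.8144
```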

The need for correctness at the transaction level is critical. If you unknowingly have one transaction wrong, you might unknowingly have 100,000 wrong – how can you prove otherwise, except by actually proving that they are all correct?

It is the difficulty surrounding this transaction level proof-point that makes system migrations in particular such a high risk endeavour – Bloor quotes ‘a survey of UK-based financial services firms in 2006 found that 72% of them considered data migration to be “too risky”’[4].

And with good reason. The Insurance Innovation Reporter states: “A Bloor Research whitepaper estimates that ‘38 percent of DM projects end in failure’—a disheartening statistic for any CIO facing a data migration project”[5].

We think 38% is an under-estimate, and it also does not convey the existential risk that a failed legacy upgrade or migration represents to its host organisation.

Existential risk is highlighted in this CIO magazine report about a failed migration: “Cigna lost a large number of employer accounts last year and watched its stock plunge 40 percent.”[6]

While it is difficult to make or obtain public statements for obvious reasons, we are aware first-hand that multiple tier one insurance migrations have recently failed or are not achieving their original objectives, with some of these now in court. In New Zealand, most of the insurance industry is now overseas owned, after the major local insurers succumbed one after the other to the risks posed by failed IT upgrades or migrations.

If you get a migration wrong, your organisation is in jeopardy. Correcting rules errors in the target system is implausible if there is no clear definition of correctness in the rules requirements in the first place (that is, Master Rules) – rules development by trial and error in a new platform is not for the faint-hearted. Even the limited degree of truth represented by the old legacy system has now gone!

And the risk of failure is compounded by legal jeopardy. FinLedger reports on an $80m fine for “failure to establish effective risk assessment processes prior to migrating significant information technology operations to the public cloud environment”[7].

In Australia, substantial multi-million monthly penalties are now being levied on financial organisations that have failed to meet their obligations due to system level failures, specifically including migration failures.

In New Zealand, substantial penalties are being levied by the Labour regulator on organisations that have failed to meet legal obligations to their employees because of payroll system inadequacies. The background to this use case can be found in our award-winning submission to the Business Rules Excellence Awards[8].

At a slight tangent, but interesting because of its scale, Australia has recently imposed a $1.3 billion penalty on Westpac for inadequate due diligence relating to money laundering[9], while NAB has received a substantial penalty for errors in charges[10]. In both of these cases, we assert that the lack of Master Rules Management is a contributing factor.

System upgrade or migration can be motivated by many things, but frequently at the core is a need to gain oversight and control of the rules that implement business policy. This in turn may be motivated by commercial and/or regulatory pressure.

In a brief but compelling article by Sapiens[11], the author outlines many corporate failures that are ultimately blamed on “problems caused by data ignorance, fragmented systems and an inability to supervise one’s own processes”. And from the same Sapiens article: “Moody’s recently reviewed what makes insurance companies fail, and one of the primary reasons was that some insurers did not know their pricing models. To avoid “selling a large number of policies at inappropriately low prices, an insurer will need to have systems and controls in place to quickly identify and address cases where the pricing algorithm is under-pricing risks”, says Moody’s.”

All of which would be mitigated by implementing Master Rules Management.

Our conclusion: Sooner or later, implementing Master Rules Management will become an imperative that cannot be avoided, whether because of regulatory pressure, M&A, the need to de-risk a migration, or a need to improve rules governance to mitigate profound commercial risks. When that time comes, it will not be optional.

How We Do It

Rules are not just important artefacts in their own right; they are the only way that you can claim to correctly understand the data and its downstream processes. And correct understanding of the data is the key to correct operation of the system, thereby meeting legal and professional obligations and avoiding the jeopardies outlined above.

In order to build Master Rules in arrears, it is necessary to be able to process the ‘Master Rules’ as a mirror image of the implemented system rules for each and every value, for each and every entity in the system – past, present, and future. Whether doing an audit, a remediation, or a migration, the Master Rules need to be independently verified. These externally verified ‘Master Rules’ are processed in parallel with the system’s own rules to derive real-time mirror image data, which are then tested in-stream for deltas. That is, we create two values for each rule-derived attribute, and we calculate the difference, if any, between them.

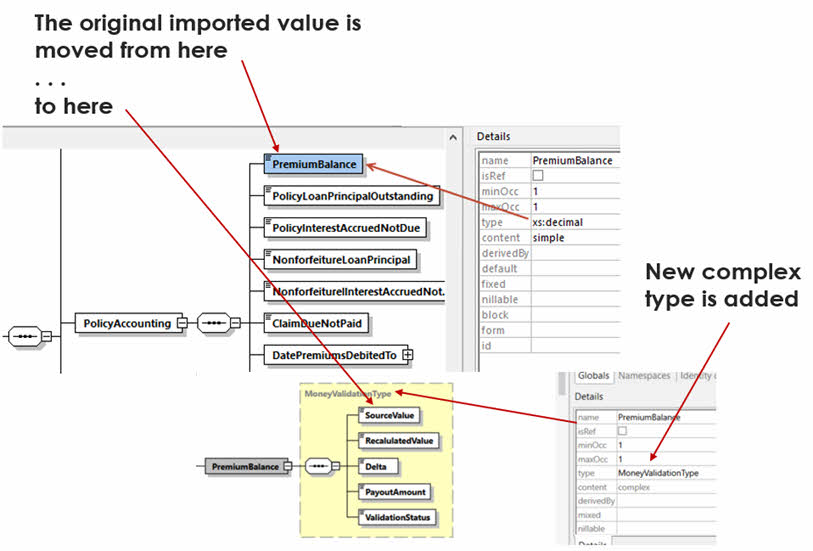

Figure 1: A Simple Design Pattern for Rules Validation

In the diagram above, we can see a value imported from a source system [Premium Balance, in this case from the old Life system CLOAS] that is subsumed into a bigger ‘complex type’. This basic mapping is fully automated in our data extraction processes. Inside the complex type, we have the mirror value (Recalculated Value) that is calculated by the audit/remediation/migration process; then the raw delta; then the adjudicated payout amount (if a remediation); and finally an adjudication as to whether there is rule level equivalence in the original and reference rules. This last item is needed because an exact match may not be plausible. For instance, if decimal precision has changed, small variations might be permissible. Also, variances in the customer’s favour may be treated differently to variances that are disadvantageous to the customer. Consequently, the decision regarding equivalence in the calculated values is nuanced.
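The complex type in Figure 1 can be approximated as a small Python class. This is a sketch, not IDIOM’s implementation: the field names loosely follow the figure, and both the one-cent tolerance and the rule that only variances against the customer are paid out are assumptions standing in for the nuanced adjudication described above.

```python
from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class AuditedValue:
    """One rule-derived attribute held twice: as imported and as recalculated."""
    source_value: Decimal        # e.g. Premium Balance imported from the legacy system
    recalculated_value: Decimal  # mirror value produced by the reference rules
    tolerance: Decimal = Decimal("0.01")  # assumed allowance for precision differences

    @property
    def delta(self) -> Decimal:
        # Sign convention (assumed): positive means the customer is owed money.
        return self.recalculated_value - self.source_value

    @property
    def equivalent(self) -> bool:
        # Rule-level equivalence: the two results agree within tolerance.
        return abs(self.delta) <= self.tolerance

    @property
    def payout(self) -> Decimal:
        # Assumed asymmetric policy: pay the customer when disadvantaged beyond
        # tolerance; never claw back variances in the customer's favour.
        return self.delta if self.delta > self.tolerance else Decimal("0")

for v in (AuditedValue(Decimal("100.00"), Decimal("100.004")),
          AuditedValue(Decimal("100.00"), Decimal("112.50")),
          AuditedValue(Decimal("100.00"), Decimal("90.00"))):
    print(v.delta, v.equivalent, v.payout)
```

Keeping equivalence and payout as separate properties lets the technical question (do the rules agree?) be answered independently of the commercial one (what do we pay?).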

We also use rules to create validation ‘alerts’ to inform SMEs about the state of the data on a record-by-record basis, and to drive manual remediation activities if required.

With this simple design pattern in place, we can easily provide a management dashboard that shows in real-time:

- The pass/fail ratio – the numbers of inbound fields that are being correctly re-calculated, and therefore are shown to be correctly understood;

- And by extension, the number of entities (customers, policies, et al) passing or failing;

- The total imbalance – the sum of the deltas;

- The cost of any remediation payout – the sum of payout amounts;

- The amount sacrificed without payout – the difference between the deltas and the payout amounts;

- The number of validation alerts raised, by alert type and entity type;

- And any other statistics of interest that can be derived by rules.
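Because every rule-derived attribute carries its own delta, payout, and pass/fail flag, the dashboard figures listed above reduce to simple aggregations. The sketch below assumes a hypothetical flat `FieldResult` record and treats an entity as passing only when all of its fields pass; both are illustrative choices rather than a prescribed design.

```python
from collections import Counter
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, Iterable, List, Tuple

@dataclass(frozen=True)
class FieldResult:
    entity_id: str
    delta: Decimal
    payout: Decimal
    passed: bool

def dashboard(results: List[FieldResult],
              alerts: Iterable[Tuple[str, str]]) -> Dict[str, object]:
    """alerts: (alert_type, entity_type) pairs raised by validation rules."""
    all_entities = {r.entity_id for r in results}
    failing = {r.entity_id for r in results if not r.passed}
    total_delta = sum((r.delta for r in results), Decimal("0"))
    total_payout = sum((r.payout for r in results), Decimal("0"))
    return {
        "fields_passed": sum(r.passed for r in results),
        "fields_failed": sum(not r.passed for r in results),
        "entities_passed": len(all_entities - failing),
        "entities_failed": len(failing),
        "total_imbalance": total_delta,            # sum of the deltas
        "remediation_cost": total_payout,          # sum of payout amounts
        "sacrificed": total_delta - total_payout,  # deltas not paid out
        "alerts": Counter(alerts),                 # by alert type and entity type
    }

results = [
    FieldResult("P1", Decimal("0.00"), Decimal("0.00"), True),
    FieldResult("P1", Decimal("5.00"), Decimal("5.00"), False),
    FieldResult("P2", Decimal("2.00"), Decimal("0.00"), False),
    FieldResult("P3", Decimal("0.00"), Decimal("0.00"), True),
]
alerts = [("DATE_ORDER", "Policy"), ("DATE_ORDER", "Policy"), ("AGE_RANGE", "Member")]
for key, value in dashboard(results, alerts).items():
    print(key, value)
```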

This entire process can easily be run on a daily basis as our Master Rules grow, and as our ability to mimic and validate the source systems grows accordingly. The outcome of this iterative mirroring process is a new set of rules that are the true ‘Master Rules’ for the subject at hand.

In our approach, these rules are built declaratively without code (‘no-code’ is the favoured term now) by SMEs, and they live externally to the system as XML and as system generated ‘logical English’, along with supporting documentation; and also as auto-generated Java and/or C# code, so that they both describe and implement the rules.

By virtue of the automated validation process just described, the now fully formed Master Rules can be shown to be 100% correct, consistent, and complete by recalculation and comparison of outcomes across any size dataset, any number of entities. And because the rules are fully executable, they can be used for ongoing audit of any remediated or migrated portfolio.

Following audit, remediation, and/or migration, the Master Rules become the reference ruleset for the entire business. As we described in our article “The Case of the Missing Algorithm”[12], these rules can be substantial – extending to embrace all rules across the full extent of the organisation.

And because the rules are both machine and human readable, the Master Rules become the source not only for all systems, but also for all human disclosure and management requirements – fulfilling the immediate promise of Master Rules Management.

And Then the Benefits Kick In

When we have completed the above, the organisation has recovered the state of rules management to that anticipated by the Beast article. The rules have been captured and made real, and shown to be correct, consistent, and complete.

But why stop there? With a full set of provably correct rules that are also fully executable, we can now look to inject these rules back into the underlying systems for real-time execution in day-to-day operations. It is a simple step to move from monitoring and compliance, to automated rules in operational systems, which is easily achieved using any number of techniques from internally compiled and executed rules, to exits and APIs, to full blown external rules engines.

When this is achieved, Master Rules Management can also be used for forward looking simulations and champion/challenger strategizing. Rules management becomes proactive and provides a natural feedback mechanism to inform the formulation of business policy. This symbiosis between policy and rules is the ultimate promise of Master Rules Management.

We can start asking things like: if we were to forgive these fees under these conditions, what would it cost? How many customers would be affected? This impact and cost (derived from the deltas described earlier) would then feed into the policy development process, at which point the business can ask: is this cost worth the improved customer relationships that it will foster? The interplay of rules-derived feedback and judgement-based policy formulation becomes one seamless process.
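A what-if question of this kind can be run as a champion/challenger comparison: apply the current rule and a candidate rule to the same portfolio and aggregate the deltas. The `Account` record and the waiver condition (10 or more years as a customer and a balance under 5,000) in the sketch below are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable, Dict, List

@dataclass(frozen=True)
class Account:
    customer_id: str
    balance: Decimal
    annual_fee: Decimal
    years_as_customer: int

FeeRule = Callable[[Account], Decimal]

def champion_fee(a: Account) -> Decimal:
    """Current policy: every account pays its annual fee."""
    return a.annual_fee

def challenger_fee(a: Account) -> Decimal:
    """Hypothetical policy option: waive the fee for long-standing, low-balance customers."""
    if a.years_as_customer >= 10 and a.balance < Decimal("5000"):
        return Decimal("0")
    return a.annual_fee

def simulate(accounts: List[Account], champion: FeeRule,
             challenger: FeeRule) -> Dict[str, object]:
    deltas = [challenger(a) - champion(a) for a in accounts]
    return {
        "cost": -sum(deltas, Decimal("0")),  # fee income forgone
        "customers_affected": sum(1 for d in deltas if d != 0),
    }

accounts = [
    Account("C1", Decimal("3000"), Decimal("120"), 12),
    Account("C2", Decimal("8000"), Decimal("120"), 15),
    Account("C3", Decimal("1000"), Decimal("60"), 3),
]
impact = simulate(accounts, champion_fee, challenger_fee)
print(impact)  # {'cost': Decimal('120'), 'customers_affected': 1}
```

Because both rules run over the same data, the cost and reach of the proposed policy fall out of the same delta mechanism used for audit and remediation.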

Conclusion

Master Data is a concept that most IT shops are familiar with; Master Rules is not.

Master Data cannot address the issue of data quality without pairing it with the rules that define and/or derive that data; that is, the Master Rules.

Sooner or later, all significant financial sector organisations (in particular) will confront an impending migration, regulatory pressure, M&A, commercial imperative, or other compelling need to improve the management of their business rules; then, it must be done – Master Rules must be implemented to provide the authoritative view of rules that their importance requires and deserves.

Finis

Author: Mark Norton, CEO and founder, IDIOM Ltd

Mark is the CEO and founder of IDIOM Limited. Throughout his 40 years in enterprise scale software development, Mark has been active in the development and use of business centric, model driven design approaches.

In 2001 Mark led a small group of private investors to establish IDIOM Ltd. He has since guided the development program for IDIOM’s ‘no-code’ business rules automation products, and has had the opportunity to apply IDIOM’s tools and approaches to projects in Europe, Asia, North America and Australasia for the benefit of customers in banking and insurance, health, government, and logistics.

Mark Norton | CEO and Founder | Idiom Limited

+64 9 630 8950 | Mobile +64 21 434 669 | Australia free call 1800 049 004

2-2, 93 Dominion Road, Mount Eden, Auckland 1024 | PO Box 60101, Titirangi, Auckland 0642, New Zealand

idiomsoftware.com | Skype Mark.Norton

References/footnotes:

[1] http://www.modernanalyst.com/Resources/Articles/tabid/115/ID/1354/Requirements-and-the-Beast-of-Complexity.aspx

[2] https://www.dataversity.net/a-brief-history-of-master-data/

[3] https://www.gartner.com/en/information-technology/glossary/master-data-management-mdm

[4] Bloor Research, business-centric-data-migration.pdf, available from https://www.bloorresearch.com/

[5] https://iireporter.com/10-ways-to-reduce-the-risk-of-data-migration-during-legacy-replacement/

[6] https://www.cio.com/article/2440140/integration-management---cigna-s-self-inflicted-wounds.html

[7] https://www.housingwire.com/articles/the-occ-slaps-capital-one-with-80m-fine-over-cybersecurity-risk-management-practices/

[8] http://www.idiomsoftware.com/docs/idiom-brea-submission-deloitte.pdf

[9] https://www.zdnet.com/article/westpac-agrees-to-au1-3-billion-penalty-for-breaching-anti-money-laundering-laws/

[10] https://www.smh.com.au/business/banking-and-finance/nab-fined-57-5m-in-fees-for-no-service-case-20200911-p55uvn.html

[11] https://www.sapiens.com/blog/a-failed-legacy-the-dangers-for-insurers/

[12] https://www.modernanalyst.com/Resources/Articles/tabid/115/ID/5127/The-Case-of-the-Missing-Algorithm--And-what-has-Haecceity-got-to-do-with-it.aspx