(Part 1 - A Paradigm for Processing Complex Events in Real Time)

The diagrams in this article are drawn from materials copyrighted by Knowledge Partners International LLC and IBM Corporation.

While adoption of The Decision Model (TDM) has grown steadily since 2009, work on The Event Model (TEM) began only in 2012. A team of three people from IBM Research – Haifa, one from IBM Global Services, and two from KPI[1] joined forces in pursuit of a common goal: to improve the current event processing paradigm with a model similar to The Decision Model. This two-part series introduces the result of that joint study.

If you are familiar with The Decision Model, the similarities and differences will be easy to follow. If you are not, you need not worry. This series will still be useful because The Event Model is meant to be easy to understand.

Today, The Event Model is in its early stage, just as The Decision Model was prior to its publication in 2009. Even so, there is already a lot of interest in The Event Model. One reason is that the general understanding of events, even in everyday life, seems more intuitive to most people than the formal understanding of business logic, business rules, and business decisions was back in 2008. Furthermore, business people understand the necessity to analyze and act on events as they happen. Another reason is that organizations are struggling with event processing as a result of new regulations, the Internet of Things[2], and the promise of Big Data. This two-part series lays a foundation for a basic understanding of The Event Model. It does not address the technology tasks needed to deploy event models as executable code.

Objectives

Part 1 has three objectives. The first is to revisit lessons from past innovations. It may seem unimportant to revisit the history of similar models. On the contrary, it is quite important because revisiting past successes can crystallize an exciting future and pave the way to an exciting vision. You may find it fascinating to be on the edge of research that is new, promising, and needed. The second objective is to introduce the event paradigm. The third is to provide a first glance at The Event Model in preparation for its details in Part 2.

Lessons from Past History

We begin with the past to point out the value of where we have already been and where we are today. In particular, we revisit two unique models that changed the way we do things: the relational model and The Decision Model. But, most important are the lessons these models taught us that we now take forward into the world of event modeling.

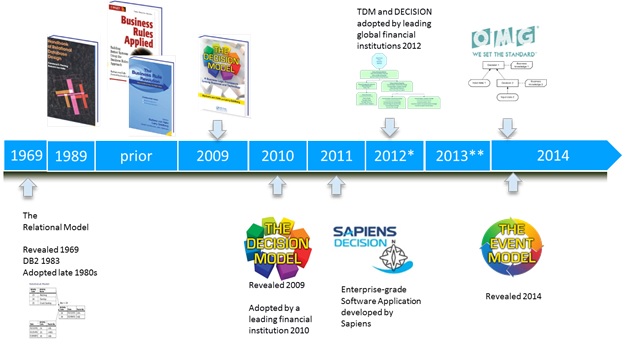

The journey to TEM begins with the timeline in Figure 1. If you lived through its early years, you understand why it is a powerful timeline. If you did not, you may discover insights that you take for granted today but that are quite intriguing and inspiring.

Figure 1: Timeline of Inspiring Models

First Time

The first important part of the timeline is that the relational model was revealed in a paper by Dr. E.F. Codd at IBM in 1969. It was a predefined abstract data model that was technology independent. But those words “abstract model” and “technology independent” were unfamiliar phrases back then. From this model emerged relational systems as we know them today. But what many people don’t know (or have forgotten) is that these were the first systems ever to be constructed in accordance with the prescriptions of a predefined abstract model. In other words, the relational model existed before there was specific relational technology.

Relational systems from IBM appeared in the 1980s and adoption happened later and slowly. Twenty years after 1969, Fleming and von Halle published a book (as did other people) on how to correlate the various data modeling diagrams of the 1970s with the relational theory from Dr. Codd.

Second Time

The second important part on this timeline is that, prior to 2009, the business rule space had no universal modeling diagrams or modeling theory. So, von Halle and Goldberg, after testing The Decision Model with clients, published a book that pulled together new decision model theory and a diagramming technique for the better management of business rules and business logic.

In 2010, The Decision Model was adopted by a leading financial institution even without commercially available software. The years 2011-2012 saw the spread of decision modeling and supporting enterprise software in global financial institutions. Most recently, in January 2014, the OMG (the Object Management Group, an IT standards group) published a new standard called Decision Model and Notation. This new standard legitimizes decision modeling as a new discipline and a new software marketplace.

Third Time

The third important part of this timeline is the focus of this series.

Specifically, in 2013, KPI joined the IBM Research – Haifa team to model events. Simply put, we combined a diagram notation with appropriate event-based science while keeping it business-friendly and technology independent.

For the purpose of comparison, consider that the logic in The Decision Model represents the correlation of fact-based conditions to corresponding fact-based conclusions. In The Decision Model, these conclusions are called business decisions. An example is the logic leading to a conclusion as to whether a student qualifies for financial aid using the raw data provided in that student’s financial aid application. That is, raw input data is evaluated, interim conclusions are reached, and correlations among raw data and interim conclusions lead to a final student financial aid eligibility conclusion.

On the other hand, the logic in The Event Model represents the correlation of event-based conditions to corresponding event-based conclusions. In The Event Model, these conclusions are called situations. An example from recent events is the logic leading to a conclusion as to whether a plane’s behavior qualifies as being suspicious. That is, raw events produced by sensors, satellites, and systems are evaluated, interim (derived) events are reached, and correlations among raw events and derived events lead to a final suspicious plane situation.

Five Lessons Learned From History

Before delving into the event paradigm, what did we learn from the past? The relational model has stood the test of time[3] and The Decision Model[4] should do likewise. Table 1 summarizes five lessons learned from this history to apply to the idea of an event model.

| # | Five Lessons Learned |
| --- | --- |
| 1 | Pre-defined technology-independent model |
| 2 | Business-friendly structure |
| 3 | Principles addressing structure, technology-independence, and optimum integrity |
| 4 | Theory of normalization for minimal redundancy |
| 5 | Model-driven code generation |

Table 1: Lessons Learned From the Past

First, a predefined technology independent model has proven to be valuable. It represents a vision first and supporting technology to follow.

Second, a business-friendly structure also has value in separating business representation from technology-specific representation. Specifically, a top-down structural diagram supported by corresponding logic tables has proven to be intuitive and understandable by business people.[5]

Third, underlying principles behind models are valuable because they prescribe the structure, keep it technology independent, and define optimum integrity of the content within that structure. Most importantly, they also provide a means for model validation.

Fourth, a solid theoretical foundation of normalization is valuable. It reduces the content of each model to its minimal representation, which saves overhead and reduces errors in future maintenance.

And finally, model-driven code generation is not only good, it opens the door to many advantages. The Holy Grail is to generate code directly from the model so that non-technical people can create the models (or at least understand them), possibly validate them against principles and with test cases, and IT can deploy them to target technologies. In other words, it closes the gap between business and IT.

So while these models differ from one another, they share five characteristics that deliver proven value: they are technology independent, business-friendly, supported by principles, based on normalization, and amenable to code generation.

The Event Paradigm: Situation Awareness

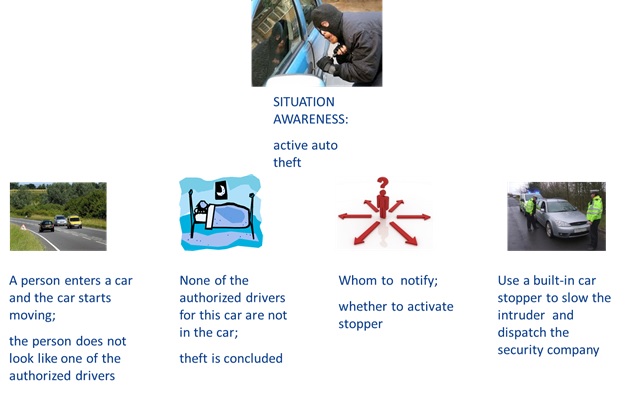

Essentially, the event paradigm is about “situation awareness.” To illustrate this we use the example in Figure 2. At the top of Figure 2 is a situation we want to know about. Specifically, it is the situation of a car theft happening right now.

What is Situation Awareness?

According to Gartner[6], situation awareness simply “means understanding what is going on so that you can decide what to do”. To illustrate the event paradigm that leads to this situation awareness, the leftmost photo in Figure 2 shows the detection that a person has entered a car and that the car has started to move, followed by the derivation that the person does not resemble any of the authorized drivers. The second photo in Figure 2 shows the further derivation that none of the authorized drivers is in the car, at which point we become aware of the situation of an active car theft.

The remainder of Figure 2 shows the decision about whom to notify and whether to activate a means for stopping the car. If so, the activated stopper slows down the car and a dispatch notification goes to the security company. This, in a nutshell, is an example of the event paradigm.

Figure 2: Sample Situation Awareness

So, the event paradigm is the processing of events and their data in (near) real time, potentially from multiple input sources, to create situation awareness and react to it. The real-time aspect, along with the sense-and-react nature of the event paradigm, differentiates it from other paradigms. For example, recording events, storing their data, and analyzing them later does not represent the full richness and uniqueness of the event paradigm.

The Four Steps in the Event Paradigm

Let’s now understand the four steps in the event paradigm by using Figure 3.

Figure 3: The Four Steps in the Event-Driven Paradigm

It starts with the first D to detect raw “happenings” such as bank account activity, signals from a medical device, or signals from an airplane. Logic applied to these raw events brings us to the second D to derive a resulting event. A resulting event, called a derived event, is a happening of greater magnitude and importance than simply the individual raw happenings viewed in a vacuum. There can be many derived events[7] before reaching the ultimate event-based conclusion. The ultimate event-based conclusion or derived event in The Event Model is called a situation[8].

Once a situation is derived, the third D for decide means to determine what to do about it. Once it is determined what to do about it, the final D comes into play, which is simply do it!

So, detecting raw events as input and applying logic to arrive at derived events, and eventually at the final situation of interest, is what happens in The Event Model. Deciding what to do about the situation may happen in The Decision Model. The reaction to it may happen in a process model or task.
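The four steps can be sketched as a small pipeline. This is only an illustration, not part of The Event Model itself: the `Event` class, the function names, and the car-theft attributes (loosely following Figure 2) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass(frozen=True)
class Event:
    name: str
    data: dict


def run_four_ds(
    raw_events: Iterable[Event],
    derive: Callable[[Event], Optional[Event]],
    decide: Callable[[Event], str],
    do: Callable[[str], str],
) -> List[str]:
    """Pass each detected raw event through derive, decide, and do."""
    outcomes = []
    for raw in raw_events:            # Detect: a raw event arrives
        situation = derive(raw)       # Derive: event logic (an event model)
        if situation is None:
            continue                  # no situation of interest
        action = decide(situation)    # Decide: e.g. a decision model
        outcomes.append(do(action))   # Do: e.g. a process task
    return outcomes


# Hypothetical car-theft logic; attribute names are assumptions.
def derive_car_theft(raw: Event) -> Optional[Event]:
    if raw.name == "car started" and not raw.data["driver authorized"]:
        return Event("car theft in progress", raw.data)
    return None


def decide_reaction(situation: Event) -> str:
    return "stop car and notify security company"


def perform(action: str) -> str:
    return f"done: {action}"


raw_stream = [
    Event("car started", {"car": "A", "driver authorized": True}),
    Event("car started", {"car": "B", "driver authorized": False}),
]
outcomes = run_four_ds(raw_stream, derive_car_theft, decide_reaction, perform)
```

Keeping `derive`, `decide`, and `do` as separate functions mirrors the separation described above: the derivation belongs to an event model, the decision to a decision model, and the reaction to a process or task.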

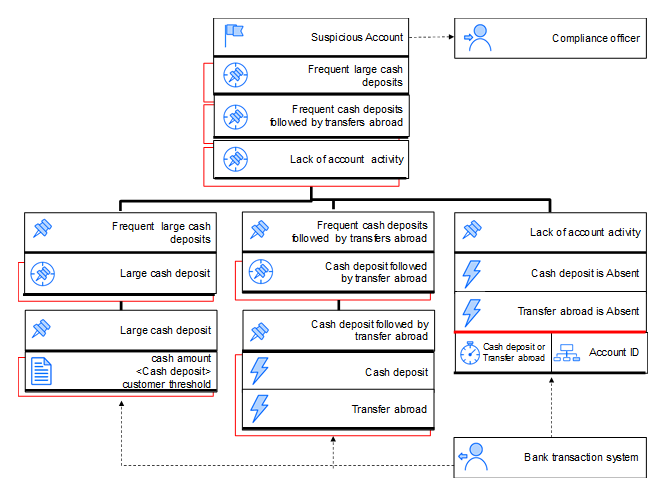

Figure 4 illustrates the inherent complexity in the event paradigm among event detection, timing, and situation awareness, using an example of suspicious account derivation. Let’s assume that we want to derive that an account is suspicious whenever we detect three large cash deposits in a sliding time window of ten days.

First, the situation is driven by events (event-driven logic). Second, the situation is usually “hidden” and is the result of analysis of multiple events. Third, the situation is valuable and relevant in temporal windows. Furthermore, the situation should be derived as soon as it happens (at the end of the temporal window) so that the reaction and decision can be made in real-time.

Figure 4: Insights into event derivation complexity
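A minimal sketch of the suspicious-account example may help make the sliding window concrete. It assumes that the large cash deposits have already been derived, that they arrive in time order, and that a deposit made exactly ten days earlier still counts; the function and constant names are hypothetical.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

WINDOW = timedelta(days=10)   # sliding time window from the example
REQUIRED_DEPOSITS = 3         # large cash deposits needed inside the window


def suspicious_accounts(large_deposits):
    """Yield (account_id, time) whenever an account reaches three large
    cash deposits within the window.

    large_deposits: iterable of (account_id, time) pairs in time order.
    """
    recent = defaultdict(deque)   # one window of timestamps per account
    for account_id, time in large_deposits:
        window = recent[account_id]
        window.append(time)
        # Slide the window: forget deposits older than ten days.
        while time - window[0] > WINDOW:
            window.popleft()
        if len(window) >= REQUIRED_DEPOSITS:
            yield account_id, time


def day(n):
    return datetime(2014, 1, 1) + timedelta(days=n)


deposits = [
    ("A", day(0)), ("B", day(0)),
    ("A", day(4)), ("B", day(7)),
    ("A", day(8)), ("B", day(14)),
]
alerts = list(suspicious_accounts(deposits))
```

Account A has three deposits within eight days and is flagged on the third one; account B has three deposits spread over fourteen days and is not. Note that this sketch would flag an account again on a fourth deposit inside the window, which a real model might want to suppress.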

What Are Events and Why Are They Important?

Moving from the event paradigm to event basics, an event is simply a happening anywhere in the world – a traffic light changes, a college student graduates, some money is deposited in a bank account and other money is withdrawn from a bank account. Some events are related to others in obvious ways – a batter in baseball incurs a third strike (an event) and therefore incurs an out (another event). Other events are not so obviously related, perhaps on purpose, such as events leading to money laundering or other fraud.

Today, events are ubiquitous and more accessible than before because it is possible to harness worldwide happenings and related information, over time, through the Internet, operational systems, a wide variety of devices, or other means. Applying logic to them to establish hidden relationships allows for the derivation of current, past, or future happenings to react to. In other words, the ability to relate individual events to each other as they happen[9] through logic can lead to a conclusion or situation that is greater in importance and impact than the individual events themselves.

These event-derived conclusions or situations may be good, such as upcoming marketing trends. They may be critical happenings such as pending emergencies. They may also be dangerous such as suspicious activity on a bank account or within a public place.

Difficulties of Event Processing Today

Just as data existed before the relational model and business rules and logic existed before The Decision Model, events existed before The Event Model. So, how do people today detect and react to them?

One option is through process modeling. This is similar to how people once dealt with data, business rules, and logic: before appropriate models existed, there was no model just for representing data or business rules. It turns out that, just as process models are not the most appropriate way to represent data or business rules, process models are not a desirable solution for event logic, for four similar reasons:

- Not all events fit within a well-defined process because processes are sequential and task-driven, not event-driven.

- Event logic in a process model is hidden and buried.

- Event logic is difficult to define in process model notation.

- Event logic is also cumbersome to change.

Another option is to represent event logic directly in program code much as some organizations have done with business rules and logic prior to The Decision Model. Yet, program code is not an ideal solution for representing event logic for four reasons:

- Program code is not understandable by business people.

- Such logic gets lost in the code.

- Program code is difficult to define.

- Program code is cumbersome to change (and therefore to maintain), especially if there is no direct link between what the business person wanted and the program code itself.

So, essentially, use of program code loses the business audience and their ability to govern the event logic.

A third option is applying software tools specific to event processing. Ideally, this could be the ultimate solution. However, the adoption of event processing tools in recent years has been very low, and most organizations still implement event-driven applications using dedicated program code. The main reason for the low adoption is that these tools require a high level of IT expertise in the programming language and semantics of the tool, which makes them impractical for business users.

The Promise of the Future

With The Event Model, there is a diagram dedicated to event representation and supported with corresponding event derivation tables. The diagram has business-understandable labels, a specific structure, and rigor.

The benefits of The Event Model are as follows:

- A simple diagram designed specifically to derive event logic

- No program code required for understanding

- A business-oriented glossary

- Absence of technical terms or artifacts

- Independent of technology

- Supported by integrity principles, and

First Glance at The Event Model

Before introducing The Event Model, it is important to understand its origins.

Recent History for The Event Model

Consider another timeline shown in Figure 5.

Figure 5: IBM Event Research Timeline

The IBM Haifa research team was under the direction of Dr. Opher Etzion. This is a timeline that starts with his contributions to the event processing world. Of great importance is that in 2010, the event processing team in Haifa received the highest IBM award for “groundbreaking research on event processing – establishing IBM as a leader in the new field.” Opher co-authored an authoritative book on event processing, called Event Processing in Action[10].

The IBM event model team consisted of three other people: Dr. Fabiana Fournier (from Israel), Sarit Arcushin (from Israel), and Jeff Adkins (from the USA). The KPI event model team consisted of Barbara von Halle and Dr. Larry Goldberg (both from the USA).

The Event Model Definition

The Event Model is an implementation-independent model understandable by business and technical audiences. It depicts the logic of detecting and deriving a situation of interest from a stream of open-ended event instances. It is a model for event logic that should be easier to create and understand than techniques for event processing in use by organizations today.

Three principles about the intentions for the model are:

- TEM is functionally complete in that its content is sufficient to generate executable code enforcing it.

- TEM is independent of any physical implementations.

- All TEM changes are performed only on the TEM and never again directly on generated (or created) program code.

The Event Model has more pieces than does the relational model or The Decision Model because of the complexity of event processing. These pieces include consumers, producers, data, events, contexts, and so forth. There is also a glossary and a set of event model principles.

TEM Vocabulary

Let’s solidify and consolidate definitions. In TEM, an event is an occurrence of something that has happened that is of interest to a business and is published so that we can detect it. We detect and publish it because it may require a reaction on the business’s part.

A raw event is simply the raw output of an event producer. An example of a raw event is a cash deposit into a bank account. The (event) producer is a human if a person makes the deposit or, in most businesses, a banking transaction system. Raw events in The Event Model are similar to raw data in The Decision Model.

A derived event, as already explained, is the output or conclusion of applying event logic on input events. An example is that a large cash deposit has been made into a bank account. The event logic, in this case, contains the criteria for qualifying a specific cash deposit as being a large one. So, a derived event in The Event Model is similar to inferred knowledge or interim conclusion in The Decision Model.

A situation is the final conclusion from an entire event model and it has at least one consumer who is interested in it. This means that it is published to the consumer who can react to it. A situation in an event model is similar to the conclusion of a decision model because both are the final result of modeled logic and both are published external to the model. A decision model usually publishes its conclusion to a subsequent process task while an event model publishes its situation to something or someone that can make a decision about a reaction.
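The vocabulary above can be summarized as a few plain data types. This is a rough sketch for orientation only: the class and field names are hypothetical, and the account number and amount are made-up values.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RawEvent:
    """Raw output of an event producer."""
    name: str
    producer: str
    attributes: dict


@dataclass(frozen=True)
class DerivedEvent:
    """Conclusion of applying event logic to input events."""
    name: str
    inputs: Tuple[object, ...]   # raw or derived events it was derived from


@dataclass(frozen=True)
class Situation:
    """Final conclusion of an event model, published to its consumers."""
    name: str
    inputs: Tuple[object, ...]
    consumers: Tuple[str, ...]

    def __post_init__(self):
        # A situation has at least one consumer who is interested in it.
        if not self.consumers:
            raise ValueError("a situation needs at least one consumer")


deposit = RawEvent(
    name="cash deposit",
    producer="banking transaction system",
    attributes={"account": "12-345", "amount": 15000},   # made-up values
)
large_deposit = DerivedEvent(name="large cash deposit", inputs=(deposit,))
suspicious = Situation(
    name="suspicious account",
    inputs=(large_deposit,),
    consumers=("compliance officer",),
)
```

The chain from `suspicious` back to `deposit` shows how a situation can be traced through derived events to the raw events that produced it.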

The Event Model Diagram at a Glance

An event model diagram is, therefore, a visual representation showing the structure of the logic behind event derivation. The representation has a recognizable structure specific to the characteristics of event derivation and is not the same as another kind of model.

Figure 6 contains an Event Model Diagram to derive a situation of suspicious account. Without too much trouble, you can probably identify the consumer as the compliance officer and the producer as the banking transaction system. Perhaps you are able to determine that three derived events appear to be in the top structure, supported by three branches of logic. Each branch eventually seems to end with raw input data or raw input events. These and other aspects of a TEM diagram are explained further in Part 2.

Figure 6: Event Model Diagram

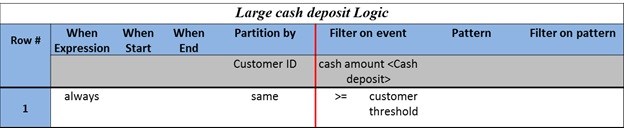

The Event Model Derivation Table at a Glance

Behind each structure in an Event Model Diagram is its corresponding event derivation table which contains the event-driven logic.

An event derivation table has two parts (separated by a red vertical line): one part for Context and one part for Conditions. By Context we mean how we partition (i.e., group) the event input occurrences so these partitions can be analyzed and processed separately. One aspect of Context is a specific Partition by column. In the example of Table 2, this column groups the input events (bank deposits) by Customer ID such that for each customer we detect whether there has been a large cash deposit.

Table 2: Event Derivation Table

Another aspect of Context is time. That’s because The Event Model needs to evaluate input over time, not just a static input (such as a mortgage application or a healthcare claim). It detects various “happenings” over time, in real time, and does so within a grouping based on time, as appropriate.

In the example in Table 2, there are three context column headings for time-related considerations: When Expression, When Start, and When End. They are followed by a partitioning column. These four columns divide the raw input into partitions so that the conditions operate on a whole partition.

There are also three kinds of conditions in an event derivation table, called Filter on event, Pattern, and Filter on pattern. In Table 2, a Filter on event condition determines if the amount of a deposit was larger than that allowed for the specific customer.

Again, more details on event logic specification are in Part 2. For now, keep in mind that all conditions in one row evaluate the input event occurrences WITHIN THE CONTEXT where the context is also defined in that same row.
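To make the idea of conditions evaluated within a context concrete, here is a simplified sketch of a single derivation-table row. It is an assumption-laden illustration, not the TEM specification: it models only When Start, When End, Partition by, and Filter on event, leaves out When Expression, Pattern, and Filter on pattern (their details are in Part 2), and uses a hypothetical `ALLOWED_DEPOSIT` lookup and event field names.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Dict, List


@dataclass(frozen=True)
class DerivationRow:
    when_start: datetime                      # Context: window start
    when_end: datetime                        # Context: window end
    partition_by: str                         # Context: grouping attribute
    filter_on_event: Callable[[dict], bool]   # Condition on each event


def evaluate(row: DerivationRow, events: List[dict]) -> Dict[str, List[dict]]:
    """Apply the row's condition within each context partition."""
    # Context first: keep events inside the window, grouped by partition key.
    partitions = defaultdict(list)
    for event in events:
        if row.when_start <= event["time"] <= row.when_end:
            partitions[event[row.partition_by]].append(event)
    # Then the condition, evaluated separately inside every partition.
    derived = {}
    for key, group in partitions.items():
        matches = [e for e in group if row.filter_on_event(e)]
        if matches:
            derived[key] = matches
    return derived


# Hypothetical per-customer limits for a "large" cash deposit.
ALLOWED_DEPOSIT = {"C1": 10000, "C2": 50000}

row = DerivationRow(
    when_start=datetime(2014, 1, 1),
    when_end=datetime(2014, 1, 10),
    partition_by="customer_id",
    filter_on_event=lambda e: e["amount"] > ALLOWED_DEPOSIT[e["customer_id"]],
)
events = [
    {"customer_id": "C1", "amount": 12000, "time": datetime(2014, 1, 3)},
    {"customer_id": "C2", "amount": 12000, "time": datetime(2014, 1, 4)},
    {"customer_id": "C1", "amount": 20000, "time": datetime(2014, 2, 1)},
]
large_deposits = evaluate(row, events)
```

Here only the first deposit by customer C1 qualifies: the same amount is within the limit for C2, and C1's later deposit falls outside the time window.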

Coming Soon in Part 2

If you find The Event Model intriguing and useful, be sure to check out Part 2 of this series. Part 2 covers how to do high level design in TEM and walks you through the creation of an event model diagram and its corresponding event derivation tables.

Authors: Barbara von Halle, Managing Partner of KPI LLC & Dr. Fabiana Fournier, Researcher at IBM Research.

Barbara von Halle is Managing Partner of Knowledge Partners International, LLC (KPI). She holds a BA degree and the award in Mathematics from Fordham University and an MS degree in Computer Science from Stevens Institute of Technology. She has 30 years of experience in data architecture, consulting to major corporations on enterprise data management. As the fifth recipient of the Outstanding Individual Achievement Award from International DAMA, she was inducted into the Hall of Fame in 1995. Her first book, Handbook of Relational Database Design, continues to be a standard reference in database design. She was the most popular columnist in the leading publication, Database Programming and Design magazine, for over five years. More recently, she co-authored The Decision Model: A Business Logic Framework Linking Business and Technology, published by Taylor and Francis LLC in 2009. She is a named inventor on two patents: Business Decision Modeling and Management Method (USPTO 8,073,801) and Event Based Code Generation (filed). She can be reached at [email protected].

Dr. Fabiana Fournier is a researcher in the Event-Driven Decision Technologies group at IBM Research - Haifa, holding a B.Sc. in Information Systems, and an M.Sc. and a PhD in Industrial Engineering from the Technion - Israel Institute of Technology. Dr. Fournier has over fifteen years of research, practical experience, and numerous publications in the areas of event processing, organizational and process modelling, business transformation, and management. Her research areas also include business process management, business operations, enterprise architecture, and service-oriented architectures. Since she joined IBM Research – Haifa in 2006, she has been involved as a designer and modeler of event processing applications in various domains such as financial risk and compliance, anti-money laundering, logistics, and agri-food. Dr. Fournier has received various awards, including the IBM Outstanding Technical Accomplishment Award for her work on component business modelling, systems engineering in aerospace and defense, and artifact-centric processes. She is a named co-inventor on two patents: Method and Apparatus for an Integrated Interoperable Semantic System for carrying out cross-tool cross-organization System Engineering (filed) and Event Based Code Generation (filed). She can be reached at [email protected].

1. KPI stands for Knowledge Partners International LLC

2. Internet of Things means “embedding chips, sensors, and communications modules into everyday objects” per Viktor Mayer-Schonberger and Kenneth Cukier in Big Data, 2013, First Mariner Books

3. Granted, the relational model is a product from the “small data” era. Today, we are entering the “big data” era where more, perhaps messy data is more valuable than less, cleansed data for seeing a bigger picture. So, the data world is evolving. Regardless, the idea of an abstract, pre-defined model based on science was a breakthrough and still has benefits.

4. The Decision Model may evolve further as it is young in its evolution.

5. Other representations are possible, but this combination was chosen because it has a long history of acceptance.

6. Gartner report G00246894, July 2013

7. In the general world of event processing, derived events are sometimes referred to as complex events or situations.

8. In The Event Model, the word “situation” refers specifically to the derived event emitted to the outside world by an Event Model.

9. The derived event in (near) real time is an important part of TEM because of the relevant temporal window. Otherwise, queries to an off-line database will suffice.

10. Etzion, Opher and Peter Niblett, 2009: Manning Publications