It has been almost a year and a half since I published my experiences from my first decision modeling project in a chapter titled “Better, Cheaper, Faster” in the book, The Decision Model: A Business Logic Framework Linking Business and Technology by Barbara von Halle and Larry Goldberg [1]. Since then, I have completed eleven more decision modeling projects and have pressure-tested and stretched The Decision Model in each project in ways that I could not have originally imagined. I can confidently report that The Decision Model Principles have held up well in the toughest projects.

In this article, I explain a project completed in the financial services industry. A client asked me to lead a project to redesign a failed sub-process that had resulted in billions of dollars of backed up financial transactions. This particular financial process had a history of failed and abandoned process improvement projects. The pressure was on and, I must confess, I was not entirely sure that The Decision Model would be a good fit.

Before presenting the details of this project, I want to report that the results were impressive! The team that I led completed this project in ten weeks. We reduced the processing time for 200 “transactions with issues” from 90 hours to 3 minutes and 30 seconds. We reduced the processing time for “transactions with no issues” from an average of 30 hours to 3 minutes and 30 seconds. The ten weeks included discovering and modeling the old process, decision modeling, process redesign, system development, and testing. This is pretty impressive considering that I initially was not entirely sure I could help them!

Let me start with an overview of the project and how I used The Decision Model to achieve these results. From there, I drill into the results a little deeper.

Project Approach and Steps

In the first step of the project, I led the team through documenting the business motivations [2] for the project and aligning them with business performance metrics, which would measure our success. For this, we used parts of the Business Motivation Model, an Object Management Group (OMG) specification that addresses the practice of business planning. We used it to manage the project scope from a business perspective, keeping the team focused only on tasks that directly related to achieving the business goals.

In the next step of the project, I led the team through discovering the current process and the decisions buried in it. As is typical on other projects, the “as-is” process had been documented in a recently created eighty-page document that was also used to train new staff. The team quickly discovered that the document was difficult to understand, ambiguous, error-ridden, and incomplete. I find that most companies document their processes in a similar fashion.

No one is really at fault. After all, it took decades to understand the value of separating different dimensions, such as separating data from process. It also took decades to understand that each separated dimension is best represented in its own unique way, unbiased by the other dimensions. Prior to The Decision Model, we did not have a way to separate decisions from process as their own standalone deliverable.

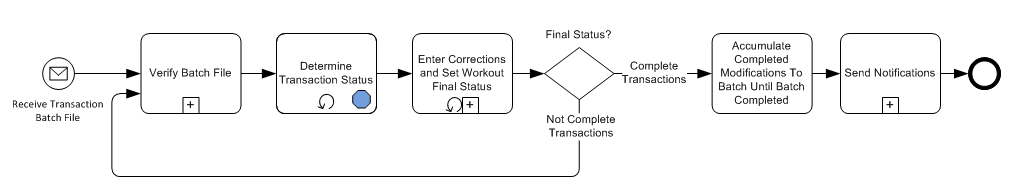

In spite of the existing documentation, the team quickly created a high-level process model and discovered the decisions in the process. We separated the process from the decisions, casting each decision into its own decision model. Separating the decisions from process models greatly simplified the process model. Figure 1 contains a generic version of the resulting high-level to-be process model.

Figure 1: High-Level To-Be Process Model with The Decision Model

The new process model illustrates the important improvement The Decision Model brings to existing process improvement methodologies. I’m a big fan of process improvement methodologies such as LEAN, Six Sigma, and other proven approaches, and I have used them to improve and redesign many processes. However, until I learned about The Decision Model, process redesign and improvement were not as clear and straightforward as I was led to believe. This is because, once decisions are separated from the process, it becomes clear that, as Larry Goldberg has observed to me (only somewhat tongue in cheek!), a process principally exists to collect data to make decisions and take appropriate action! With this in mind, I always ask a simple question: at what point in a process is it most valuable to make each decision? This question reveals a level of separation and abstraction that makes process improvement and redesign much easier to envision and more intuitive. The tangible separation of process and decisions takes process improvement and reengineering to a new level.

In the next step, the team completed the business logic in each decision model. No one on the team had previous decision modeling experience, with the exception of one team member certified in The Decision Model. I introduced the team to decision modeling by giving them a short primer. The team then dove directly into decision modeling. All of the business SMEs quickly grasped The Decision Model and, almost immediately, became our main project contributors and evangelists.

Confession about The Decision Model

In my introduction, I confessed that I was originally not sure that The Decision Model would be a good fit for this project. The reason for my hesitation was that this was primarily a data quality project. The Decision Model was originally advertised as an unlikely fit for data quality projects (in fact, von Halle and Goldberg say as much in their book!). However, during a previous engagement, I was assigned the task of determining how The Decision Model could be used to solve some tough data quality challenges. After careful evaluation, I concluded that The Decision Model is an excellent technique for documenting and managing data quality logic. I wrote a paper documenting the data quality framework using The Decision Model. Portions of this framework were presented at the MIT 2010 Information Quality Industry Symposium (see http://www.tdan.com/view-featured-columns/14295). A more complete version of the framework is in Table 1.

Table 1: Data Quality Framework

| Data Quality Dimension | Definition | Repository of Data Quality |
|---|---|---|
| 1. Completeness | The determination that no additional data is needed to determine whether a Single Family Loan is eligible for settlement. Completeness applies to fact types that are always required, those that are sometimes required (depending on circumstances), and those whose population is irrelevant. | The Glossary will indicate, for each fact type, whether it is always required or always optional for the general context of a business process (applies to all circumstances regardless of process context). Decision Model Views will indicate the logic by which a fact type is sometimes required or irrelevant (i.e., those circumstances are tested in the logic). |
| 2. Data Type | The determination that a fact value conforms to the predefined data type for its fact type. For example: date, integer, string, Boolean, etc. | |
| 3. Domain Value | The determination that a fact value falls within the fact type's valid range. | The Glossary will indicate, for each fact type, its valid values. Decision Model Views will indicate the logic by which the domain for a fact type is further restricted for a specific process context to be compliant with Policy. |
| 4. Consistency | The determination that a fact value makes business sense in the context of related fact type/s fact values. | |
| 5. Reasonableness | The determination that a fact value conforms to predefined reasonability limits. | |
| 6. Accuracy | The determination that a fact value (regardless of the source) approaches its true value. Accuracy is typically classified into the following categories: (1) Accuracy to authoritative source: A measure of the degree to which data agrees with an original, acknowledged authoritative source of data about a real world object or event, such as a form, document, or unaltered electronic data from inside or outside the organization. (2) Accuracy to real world data (reality): A characteristic of information quality measuring the degree to which a data value (or set of data values) correctly represents the attributes of the real-world object such as past or real time experience. | |
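To make the framework a little more concrete, here is a minimal Python sketch of how four of the dimensions in Table 1 (Completeness, Data Type, Domain Value, and Reasonableness) might be checked against a glossary. It is an illustration only, not the project's implementation: the fact type names (`loan_amount`, `settlement_date`, `loan_purpose`), the glossary layout, and the limits are all hypothetical. Consistency and Accuracy are left out because they depend on related fact types or an authoritative source.

```python
from datetime import date

# Hypothetical glossary: one entry per fact type (names and limits are assumptions).
GLOSSARY = {
    "loan_amount": {"type": int, "required": True, "reasonable": (1_000, 5_000_000)},
    "settlement_date": {"type": date, "required": True},
    "loan_purpose": {"type": str, "required": False,
                     "domain": {"Purchase", "Refinance"}},
}


def check_record(record):
    """Return a list of (dimension, fact_type, problem) tuples for one record."""
    issues = []
    for fact_type, spec in GLOSSARY.items():
        value = record.get(fact_type)
        # 1. Completeness: always-required fact types must be populated.
        if value is None:
            if spec["required"]:
                issues.append(("Completeness", fact_type, "missing"))
            continue
        # 2. Data Type: the value must conform to the fact type's data type.
        if not isinstance(value, spec["type"]):
            issues.append(("Data Type", fact_type, "wrong type"))
            continue
        # 3. Domain Value: the value must be one of the glossary's valid values.
        if "domain" in spec and value not in spec["domain"]:
            issues.append(("Domain Value", fact_type, "not a valid value"))
        # 5. Reasonableness: the value must fall within predefined limits.
        if "reasonable" in spec:
            low, high = spec["reasonable"]
            if not low <= value <= high:
                issues.append(("Reasonableness", fact_type, "outside limits"))
    return issues
```

The sketch covers only the "always required" side of Completeness. In the framework, the circumstances under which a fact type is sometimes required belong in Decision Model Views rather than in the glossary.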

Once the team discovered the high-level process and decisions, I led them iteratively through populating the decision models, analyzing them for integrity, populating the project glossary, and updating the process model. We also frequently reviewed the business motivations with the SMEs to make sure that each decision’s logic aligned with its business motivations.

We iterated through the decision models and process model four times. Each iteration improved the decision models and the process model, and eliminated business logic that did not contribute to the business objectives.

Decision Conclusion Messages

A critical success factor for this project was that each decision provide messages explaining the reason for each conclusion and the steps needed to resolve any issues. For example, if a decision determined that a transaction was not ready to move forward in the process, the rules engine needed to create a precise message explaining the issues that arose from the logic and exactly what needed to be done to fix each issue. We also had to implement separate messages for internal and external (customer) use.

In traditional applications, this functionality is complex, difficult to implement, and expensive. The Decision Model greatly simplifies it because every Rule Family row suggests a message. The business SMEs simply added messages to the Rule Family rows, and the rules engine, following the messaging methodology, automatically displayed all of the messages relevant to a specific decision conclusion value, and only those messages.
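The idea of attaching messages to Rule Family rows can be sketched in a few lines of Python. This is a simplified illustration under my own assumptions, not the messaging methodology itself: the row layout, the fact type names (`signature_present`, `amount_verified`), and the message texts are hypothetical, and conditions are limited to simple equality tests.

```python
# Hypothetical Rule Family: each row pairs conditions with a conclusion
# and one message per audience (internal staff, external customers).
RULE_FAMILY = [
    {
        "conditions": {"signature_present": False},
        "conclusion": "Not Ready",
        "internal": "Signature missing; return the file to the originator.",
        "external": "Please sign the form and resubmit it.",
    },
    {
        "conditions": {"amount_verified": False},
        "conclusion": "Not Ready",
        "internal": "Amount not verified; request the supporting statement.",
        "external": "Please send a statement confirming the amount.",
    },
    {
        "conditions": {"signature_present": True, "amount_verified": True},
        "conclusion": "Ready",
        "internal": "All checks passed.",
        "external": "Your transaction is being processed.",
    },
]


def evaluate(facts, rule_family, audience):
    """Return (conclusion, messages) using only the rows that fire for these facts."""
    fired = [
        row for row in rule_family
        if all(facts.get(name) == expected
               for name, expected in row["conditions"].items())
    ]
    if not fired:
        return None, []
    conclusions = {row["conclusion"] for row in fired}
    if len(conclusions) > 1:
        # Rows that fire together should agree on the conclusion.
        raise ValueError(f"conflicting conclusions: {sorted(conclusions)}")
    # Every fired row contributes its message, so the result contains all of
    # the messages behind this conclusion and none from rows that did not fire.
    return conclusions.pop(), [row[audience] for row in fired]
```

For a transaction with no signature and an unverified amount, `evaluate(facts, RULE_FAMILY, "external")` returns the conclusion `"Not Ready"` together with both customer-facing messages. Passing `"internal"` instead yields the staff-facing wording for the same rows.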

Business Logic Transparency and Agility

Another critical requirement of this project was business decision logic that is easy for all stakeholders to understand and that can be interpreted in only one way. The client had suffered through too many projects where this was not the case (as is the situation for most companies). They no longer wanted business logic documented in a form (usually a textual statement) that stakeholders could not understand, that was subject to interpretation, and that was ultimately buried in the program code.

The Decision Model exceeded these expectations.

Testing the Decision Models and Process

Many years ago, I was in charge of my first enterprise process improvement and reengineering project where I implemented business logic across 130 countries. I came to believe back then that fully testing the business logic would be impossible. During that project, I consulted an expert from a BPM vendor who told me it is impossible to test all business logic, that we would likely only be able to test a small percentage of it in my project. He also told me that he had never seen a project where more than a small percentage of the business logic was tested. The Decision Model completely changes this.

Using The Decision Model testing methodology and automating it, the team thoroughly tested all of the logic in a short period. We quickly identified and fixed the source of each test case failure. And, whenever the SMEs asked for business logic to be changed, we were able to test the change and perform regression testing in a matter of minutes.

I normally dread the test phase of a project, but The Decision Model made this task a pleasure. The Decision Model is a testing game changer!

Executive Review Sessions

During this project, I held several executive review sessions. The top executives needed to understand the decisions and the business logic in each decision because they are responsible for ensuring that those business decisions comply with policy. These types of meetings are always challenging because the executives need to reach a thorough, shared understanding of the business logic and process in a very short period.

I start these meetings by taking a few minutes to review the process model and point out where each decision occurs in the process. I then review decision models that are of interest to an executive to give an understanding of the structure of each decision. This generates discussion, and I am able to drill into Rule Families whenever an executive has questions or needs clarification of the business logic. Using this approach, I can guide an executive quickly from a comprehensive, high-level view to a detail view and back with amazing clarity and shared understanding.

I used to dread executive meetings because of the pressure to get participants to reach a thorough, shared understanding of a project quickly. You can always expect the tough questions. I now look forward to these meetings because The Decision Model always delivers. The Decision Model methodology has not let me down in any of these meetings. The executive is always able to grasp the model and the business logic quickly!

Technology Implementation

IT implemented the decision models and process by creating a rules engine in Java. They initially coded each decision manually (even with manual coding, The Decision Model saved a significant amount of time), but they later improved the engine by adding the ability to run decision models directly from the Rule Families, which we built in Excel. To deploy a decision model, we placed its Rule Families in a predetermined directory, and the rules engine automatically read the Rule Families and executed the decision. Whenever we update a decision model, there is no need for additional coding!
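The "drop the Rule Families in a directory" approach can be illustrated with a small sketch. The project's engine was written in Java and read Excel files. The version below is a simplified Python stand-in that reads CSV files so it runs with the standard library alone. The column convention (one column per condition fact type plus a `conclusion` column, blank cells meaning "no condition") and the equality-only matching are my assumptions for the example.

```python
import csv
from pathlib import Path

CONCLUSION_COLUMN = "conclusion"  # hypothetical naming convention


def load_rule_families(directory):
    """Read every CSV file in the directory as one Rule Family (a list of rows)."""
    families = {}
    for path in sorted(Path(directory).glob("*.csv")):
        with path.open(newline="") as handle:
            families[path.stem] = list(csv.DictReader(handle))
    return families


def decide(rule_family, facts):
    """Return the conclusion of the first row whose conditions all match the facts."""
    for row in rule_family:
        # A blank cell means the row places no condition on that fact type.
        conditions = {
            name: cell for name, cell in row.items()
            if name != CONCLUSION_COLUMN and cell != ""
        }
        # CSV cells are text, so fact values are compared as strings.
        if all(str(facts.get(name)) == cell for name, cell in conditions.items()):
            return row[CONCLUSION_COLUMN]
    return None  # no row covers these facts
```

With this arrangement, changing the logic means editing a file in the directory and reloading it. The engine code itself stays untouched, which is the property described above.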

IT also automated the running of the test cases. When we completed a decision model, we documented the test cases in Excel and put them in a directory. The application automatically read the test cases and produced a detailed report. This dramatically reduced the time to complete the initial and regression testing cycles.
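A test harness of this kind can be quite small. The Python sketch below is an assumption-laden illustration rather than the application IT built: it reads test cases from CSV files instead of Excel, assumes a hypothetical `expected` column holding the expected conclusion, and treats the decision under test as any function that maps a dictionary of facts to a conclusion.

```python
import csv
from pathlib import Path

EXPECTED_COLUMN = "expected"  # hypothetical column holding the expected conclusion


def load_test_cases(directory):
    """Read every CSV file in the directory; each data row is one test case."""
    cases = []
    for path in sorted(Path(directory).glob("*.csv")):
        with path.open(newline="") as handle:
            for number, row in enumerate(csv.DictReader(handle), start=1):
                expected = row.pop(EXPECTED_COLUMN)
                cases.append({"id": f"{path.stem}#{number}",
                              "facts": row, "expected": expected})
    return cases


def run_test_cases(decision, cases):
    """Run each case through the decision function and record the outcome."""
    report = []
    for case in cases:
        actual = decision(case["facts"])
        report.append({"id": case["id"], "expected": case["expected"],
                       "actual": actual, "passed": actual == case["expected"]})
    return report


def summarize(report):
    """Produce a one-line summary naming any failed cases."""
    failed = [line["id"] for line in report if not line["passed"]]
    passed = len(report) - len(failed)
    return f"{passed} of {len(report)} passed; failed: {', '.join(failed) or 'none'}"
```

Because the cases live in files rather than in code, rerunning the whole set after a logic change is a single call, which is what makes quick regression cycles practical.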

Conclusion

This decision modeling project was highly successful, just like all of the decision modeling projects in which I have participated. In these projects, the business owners, SMEs, and IT became very excited and involved because they quickly saw that they could accomplish goals previously believed to be impossible. Here are a few of the comments that I have heard:

Comment 1: Within the first week of a project, one business SME told me that she had been working for years to accomplish what this project accomplished in a few weeks. She told me she previously could not envision a method to accomplish her vision because she had seen so many failed projects. She went on to tell me that her excitement was because the business logic was easy to understand, could only be interpreted one way, and it was not going to get buried in the code.

Comment 2: Another business SME told me that this was the most exciting project he had worked on since he was hired. He told me that he was excited because all of the logic was visible and easy for everyone to understand. This project quickly accomplished real results where other projects seemed to drag on and on, only to be abandoned or not achieve the project goals.

Comment 3: Within the first two weeks of another project, one SME told me that she had learned and accomplished more in two weeks than she had done in a previous project that took six months and failed.

You can see why I love my job!

Author: David Pedersen is a Senior Decision Architect at Knowledge Partners International, LLC (KPI). He has over 25 years of experience in finance, technology, and developing solutions for global infrastructure initiatives for industry and non-profit organizations. Working with KPI clients, he leverages his diverse background and experience to implement BDM, BPM and Requirements solutions in a variety of industries.

With over 3 years of experience in decision modeling, Mr. Pedersen is one of the most experienced decision modelers to date. He has created decision models rich in complex and recursive calculations, containing DQ logic, and simplifying complex business processes. His decision models include large ones, spanning many pages and having many Decision Model Views. He has led tightly scoped time-boxed pilots as well as projects in which decision models solved challenges in business transformation and crisis. As a leading authority on The Decision Model, Mr. Pedersen has contributed to its advancement including Decision Model Views, hidden fact types, data quality framework, and Decision Model messaging.

Prior to KPI, he served as a Director at Ernst & Young, LLP, where he led the development, implementation and support of complex global infrastructure systems. His work included Enterprise Architecture and the development and implementation of complex global business process re-engineering and improvement initiatives that were among the firm’s top global priorities.

Mr. Pedersen is the author of many papers, a frequent contributing writer for the BPM Institute and BPTrends, and a conference guest speaker.

Article edited by Barbara von Halle and Larry Goldberg.