Some organizations acquire and adapt purchased packaged solutions (also called commercial off-the-shelf, or COTS, products) to meet their software needs, instead of building new systems from scratch. Software as a service (SaaS), or cloud, solutions are becoming increasingly available to meet software needs as well. Whether you’re purchasing a package as part or all of the solution for a new project or implementing a solution in the cloud, you still need requirements. Requirements let you evaluate solution candidates so that you can select the most appropriate package, and then they let you adapt the package to meet your needs.

Some COTS products can be deployed out of the box with no additional work needed to make them usable. Most, though, require some customization. This could take the form of configuring the default product, creating integrations to other systems, or developing extensions to provide additional functionality that is not included in the COTS package. These activities all demand requirements.

If you are seeking a commercial package to provide a canned solution for some portion of your enterprise information-processing needs, first document your requirements. You don’t need to specify all the nitty-gritty functionality details, but do consider the use cases or user stories, business rules, data needs, and quality attribute requirements. Next, do some initial market research to determine which packages are viable candidates that deserve further consideration. Then you can use the requirements you identified as evaluation criteria in an informed COTS software selection process.

One evaluation approach includes the following sequence of activities:

- Weight your requirements on a scale of 1 to 10 to distinguish their importance.

- Rate how well each candidate package satisfies each requirement. Use a rating of 1 for full satisfaction, 0.5 for partial satisfaction, and 0 for no coverage. You can obtain the information for this assessment from product literature, a vendor’s response to a request for proposal (RFP), or direct examination of the product. Keep in mind that an RFP is an invitation to bid on a project, so a vendor’s response might not reflect how you intend to use the product. Direct examination is necessary for high-priority requirements.

- Calculate each candidate’s score by multiplying each requirement’s weight by the candidate’s rating for that requirement and summing the results. Compare the totals to see which products appear to best fit your needs.

- Evaluate product cost, vendor experience and viability, vendor support for the product, external interfaces that will enable extension and integration, and compliance with any technology requirements or constraints for your environment. Cost will be a selection factor, but evaluate the candidates initially without considering their cost.
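The weighting and rating steps above reduce to simple arithmetic: a candidate’s score is the sum of weight × rating across all requirements. The following is a minimal Python sketch of that calculation, assuming weights from 1 to 10 and ratings of 0, 0.5, or 1 as described. The function name `weighted_scores`, the requirement names, and the tool names are hypothetical, chosen only for illustration, and cost is deliberately left out, in line with the advice to evaluate candidates without cost at first.

```python
# Rating scale from the evaluation steps: 1 = full, 0.5 = partial, 0 = no coverage.
VALID_RATINGS = {0.0, 0.5, 1.0}


def weighted_scores(
    weights: dict[str, int],
    ratings: dict[str, dict[str, float]],
) -> dict[str, float]:
    """Return each candidate's score: the sum of weight * rating over all requirements."""
    for req, weight in weights.items():
        if not 1 <= weight <= 10:
            raise ValueError(f"weight for {req!r} must be from 1 to 10, got {weight}")

    scores: dict[str, float] = {}
    for candidate, by_req in ratings.items():
        total = 0.0
        for req, weight in weights.items():
            # Assumption: a requirement with no rating is treated as "no coverage".
            rating = by_req.get(req, 0.0)
            if rating not in VALID_RATINGS:
                raise ValueError(f"{candidate}: rating {rating} for {req!r} is not 0, 0.5, or 1")
            total += weight * rating
        scores[candidate] = total
    return scores


# Hypothetical requirements, weights, and ratings, for illustration only.
weights = {"traceability": 10, "offline editing": 8, "import from Word": 5}
ratings = {
    "Tool A": {"traceability": 1, "offline editing": 0, "import from Word": 0.5},
    "Tool B": {"traceability": 1, "offline editing": 0.5, "import from Word": 1},
}

scores = weighted_scores(weights, ratings)
# Print candidates from highest to lowest score.
for candidate, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{candidate}: {score}")
```

With these made-up inputs, Tool B scores 10 + 4 + 5 = 19.0 and Tool A scores 10 + 0 + 2.5 = 12.5, so the loop prints Tool B first. The raw totals are only a starting point; cost, vendor viability, and the other factors in the last step still have to be weighed separately.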

Also note any requirements that none of the candidate packages meet, because they will require you to develop extensions. Extensions can add significant cost to a COTS implementation, so factor them into the evaluation.
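Spotting requirements that no candidate covers is a scan across each row of the ratings. The sketch below is a hypothetical helper, not part of any published method; it assumes that a requirement counts as “met” only if at least one candidate rates it as fully satisfied (1), so requirements with at best partial coverage (0.5) are also flagged as likely extensions.

```python
def requirements_needing_extensions(
    requirements: list[str],
    ratings: dict[str, dict[str, float]],
    full: float = 1.0,
) -> list[str]:
    """Return requirements that no candidate package fully satisfies.

    Assumption: a requirement counts as "met" only when some candidate rates
    `full` (1 = full satisfaction); partial coverage (0.5) is still flagged.
    """
    flagged = []
    for req in requirements:
        # Best rating any candidate achieved; a missing rating counts as 0.
        best = max((by_req.get(req, 0.0) for by_req in ratings.values()), default=0.0)
        if best < full:
            flagged.append(req)
    return flagged


# Hypothetical ratings, for illustration only.
ratings = {
    "Tool A": {"traceability": 1, "offline editing": 0, "import from Word": 0.5},
    "Tool B": {"traceability": 1, "offline editing": 0.5, "import from Word": 1},
}
gaps = requirements_needing_extensions(
    ["traceability", "offline editing", "import from Word"], ratings
)
print(gaps)
```

Here only "offline editing" is flagged, because the best any candidate offers is partial coverage. Lowering `full` to 0.5 would instead flag only requirements that every candidate leaves completely uncovered.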

Recently, my organization wanted to select a requirements management tool that, among other capabilities, allowed users to work offline and then synchronize with the master version of the requirements when they went back online. We suspected that no tool on the market offered a good solution for this. We included this capability in our evaluation anyway to ensure that we would uncover any solutions that did offer it. If we didn’t find one, we would know that we’d have to implement this capability as an extension to the selected package, or else change our process for editing requirements.

Another evaluation approach is to derive tests from the high-priority use cases and use them to determine whether, and how well, each package lets users perform their tasks. Include tests that explore how the system handles significant exception conditions that might arise, and walk through those tests to see how each candidate package handles them. A similar approach is to run the COTS product through a suite of scenarios that represent the expected usage patterns; this set of patterns is called an operational profile. Be sure that at least one person participates in all of the evaluations you conduct. Otherwise, you have no assurance that the evaluators interpreted the features and assigned scores consistently.

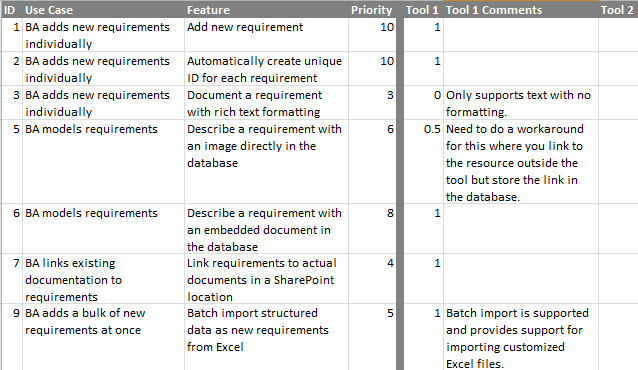

The output of the evaluation process is typically an evaluation matrix with the selection requirements in the rows and various solutions’ scores for each of those requirements in the columns. Figure 1 shows part of a sample evaluation matrix for a requirements management tool.

Figure 1. A sample of a packaged solution evaluation matrix for a requirements management tool.

When you have many potential packages to choose from, plan to conduct a multi-stage evaluation process. When I wrote the requirements for selecting a requirements management tool for our own consulting teams to use, I worked with the teams to identify the user classes and use cases for the tool. Although the primary users were business analysts, there were also a few use cases for managers, developers, and customers. I defined use cases by name and used my familiarity with them to identify desired features. I created a traceability matrix to minimize the likelihood that any use cases or features would be missed.
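A use case to feature traceability matrix can be checked mechanically for gaps in both directions. The sketch below is one possible way to do it, assuming the matrix is stored as a mapping from each feature to the set of use cases it supports; the function name `trace_gaps` and all use case and feature names are hypothetical.

```python
def trace_gaps(
    use_cases: list[str],
    feature_trace: dict[str, set[str]],
) -> tuple[list[str], list[str]]:
    """Find use cases with no traced feature, and features traced to no known use case."""
    # Every use case that at least one feature traces to.
    traced = set().union(*feature_trace.values())
    known = set(use_cases)
    untraced_use_cases = [uc for uc in use_cases if uc not in traced]
    orphan_features = [f for f, ucs in feature_trace.items() if not ucs & known]
    return untraced_use_cases, orphan_features


# Hypothetical use cases and features, for illustration only.
use_cases = ["Edit requirement offline", "Trace requirement to test", "Export status report"]
feature_trace = {
    "offline sync": {"Edit requirement offline"},
    "trace links": {"Trace requirement to test"},
    "custom themes": set(),
}
missing, orphans = trace_gaps(use_cases, feature_trace)
print(missing)
print(orphans)
```

In this example, "Export status report" has no supporting feature yet, and "custom themes" does not trace back to any use case, which suggests either a missing use case or a feature that may not belong in the selection criteria.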

We started with 200 features and 60 vendor choices, which were far too many for our evaluation timeline. We did a first-pass evaluation to eliminate most of the candidate tools. Our first pass considered only 30 features that we deemed the most important or most likely to distinguish tools from one another. This initial evaluation narrowed our search to 16 tool choices. Then we evaluated those 16 against the full set of 200 features. This detailed second-level evaluation resulted in a list of five closely ranked tools, all of which would clearly meet our needs.
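The two scored passes described above can be expressed as a ranking followed by a re-ranking. This is a minimal sketch, assuming the same weight-times-rating scoring as the single-pass evaluation and cutoffs given as a number of candidates to keep; the function names, cutoffs, features, and tools are all hypothetical stand-ins for the much larger real evaluation.

```python
from collections.abc import Iterable


def score(weights: dict[str, int], candidate_ratings: dict[str, float], features: Iterable[str]) -> float:
    """Weighted score over the given subset of features (a missing rating counts as 0)."""
    return sum(weights[f] * candidate_ratings.get(f, 0.0) for f in features)


def multi_stage_shortlist(
    weights: dict[str, int],
    ratings: dict[str, dict[str, float]],
    key_features: list[str],
    first_cut: int,
    final_cut: int,
) -> list[str]:
    # Stage 1: rank every candidate on the key features only; keep the top `first_cut`.
    # sorted() is stable, so tied candidates keep their input order.
    stage1 = sorted(
        ratings, key=lambda c: score(weights, ratings[c], key_features), reverse=True
    )[:first_cut]
    # Stage 2: re-rank the survivors on the full feature set; keep the top `final_cut`.
    stage2 = sorted(
        stage1, key=lambda c: score(weights, ratings[c], weights), reverse=True
    )[:final_cut]
    return stage2


# Hypothetical weights and ratings, for illustration only.
weights = {"traceability": 10, "offline editing": 8, "import from Word": 5}
ratings = {
    "Tool A": {"traceability": 1, "offline editing": 0, "import from Word": 0.5},
    "Tool B": {"traceability": 1, "offline editing": 0.5, "import from Word": 1},
    "Tool C": {"traceability": 0, "offline editing": 1, "import from Word": 1},
    "Tool D": {"traceability": 0.5, "offline editing": 1, "import from Word": 0},
}
shortlist = multi_stage_shortlist(weights, ratings, ["traceability"], first_cut=3, final_cut=2)
print(shortlist)
```

With these inputs the shortlist is Tool B and Tool D. Note that Tool C drops out in the first pass even though its full-set score (13) equals Tool D’s, which illustrates why the first-pass features should be the ones that matter most or best distinguish the tools.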

In addition to an objective analysis, it’s a good idea to evaluate candidate packages by using a real project, not just the tutorial project that comes with the product. We ended up adding a third level of evaluation to actually try each of those five tools on real projects so we could see which one most closely reflected the evaluation scores in practice. The third phase of the evaluation allowed us to select our favorite tool from the high-scoring ones.

Too often, the commercial software packages we select prove disappointing because they don’t meet our business needs in a way the users find acceptable. The result can be expensive custom development just to make the solution work for you. To avoid such unpleasant surprises, conduct a thorough evaluation before you write the vendor a hefty check.

Authors: Karl Wiegers & Joy Beatty

Karl Wiegers is Principal Consultant at Process Impact, www.processimpact.com. Joy Beatty is a Vice President at Seilevel, www.seilevel.com. Karl and Joy are co-authors of the award-winning book Software Requirements, 3rd Edition, from which this article is adapted. Karl’s most recent book is Successful Business Analysis Consulting: Strategies and Tips for Going It Alone.