Purpose

I’ve written this article to provide an overview of AWS (Amazon Web Services) for Business Analysts.

The cloud (in particular AWS) is now a part of many projects. If you’ve been in meetings where people have mentioned: ‘EC2’, ‘ELB’, ‘AZs’ and thought ‘WTF’ then this article should help you.

This article will provide:

- an overview of AWS (what it is, why it’s popular, how it’s used)

- typical AWS architecture for a project (e.g. VPC, Regions, AZs)

- cheat sheet for other key terms (EBS, EFS etc)

1. Overview of AWS

What is AWS

AWS is the most popular cloud platform in the world. It’s owned by Amazon & is almost as large as the next 2 cloud providers combined (Google Cloud + Microsoft’s Azure).

In a nutshell – AWS allows companies to use on-demand cloud computing from Amazon. Customers can easily access servers, storage, databases and a huge set of application services using a pay-as-you-go model.

Key Point: AWS is a cloud platform (owned by Amazon) used by companies to host and manage services in the cloud.

Why companies use it

Historically companies have owned their own IT infrastructure (e.g. servers / routers / storage). This has an overhead in terms of maintenance. It meant companies had to pay large amounts of money to own their infrastructure – even if that infrastructure was barely used at certain times (e.g. at 3am). Companies also struggled to ramp up the infrastructure if demand suddenly went up (e.g. a viral video on a website).

AWS & the cloud in general helps companies with that situation. It has 5 main benefits:

- Pay for what you use

- Scale the infrastructure to meet the demand

- Resiliency (if one data centre goes down, your service can use another)

- Cheaper (leverages the purchasing scale of Amazon)

- Removes the need to own and manage your own data centres

Key Point: AWS allows companies to only pay for the infrastructure they use. It also allows companies to quickly ramp up & ramp down infrastructure depending on demand.
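To make the "pay for what you use" point concrete, here is a rough worked example in Python. All the figures (the hourly price in pence, the number of servers needed each hour) are invented for illustration and are not real AWS prices.

```python
# Hypothetical figures, for illustration only (not real AWS prices).
HOURLY_COST_PER_SERVER_PENCE = 10  # assumed cost of one server for one hour

# Assumed number of servers needed in each hour of a day:
# quiet overnight (2), busy in the daytime (10), moderate in the evening (6).
servers_needed_per_hour = [2] * 8 + [10] * 10 + [6] * 6  # 24 entries


def fixed_capacity_cost(demand, hourly_cost):
    """Owning enough servers for the peak and running all of them all day."""
    peak = max(demand)
    return peak * len(demand) * hourly_cost


def pay_as_you_go_cost(demand, hourly_cost):
    """Paying only for the servers actually needed in each hour."""
    return sum(demand) * hourly_cost


fixed = fixed_capacity_cost(servers_needed_per_hour, HOURLY_COST_PER_SERVER_PENCE)
on_demand = pay_as_you_go_cost(servers_needed_per_hour, HOURLY_COST_PER_SERVER_PENCE)
print(f"Fixed capacity: {fixed} pence per day")
print(f"Pay as you go:  {on_demand} pence per day")
```

With these made-up numbers the fixed setup costs 2400 pence a day and the pay-as-you-go setup costs 1520 pence, because the quiet hours are no longer paid for at peak capacity.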

How companies use it

There are 3 main cloud computing models. Most companies use IaaS:

- Infrastructure as a Service (IaaS) – provides access to networking features, computers (virtual or dedicated hardware) and data storage. This provides the greatest flexibility as you control the software / IT resources. With this model you get the kit but you manage it

- Platform as a Service (PaaS) – removes the need for your organisation to manage the infrastructure (hardware and operating systems). You don’t have to worry about software updates, resource procurement & capacity planning. With this model there’s even less to do – you just deploy / manage your own application (e.g. your website code)

- Software as a Service (SaaS) – provides you with a product that is run and managed by AWS. In this model you don’t need to worry about the infrastructure OR the service

If Amazon provides a suitable managed service, then it's often cheaper to use PaaS rather than IaaS - because you don't need to build and manage the service yourself.

A note about cloud deployment models: broadly speaking there are two models & most companies operate as Hybrid:

- Cloud = application is fully deployed in the cloud. All parts of the application run in the cloud

- Hybrid = connects infrastructure & applications between cloud-based resources and non-cloud based resources. This is typically used when legacy resources were built on-premises & it’s too complex to move them to the cloud - or because the company doesn’t want certain information in the cloud (e.g. privileged customer information)

Key Point: Most companies use AWS to provision infrastructure (IaaS). Amazon also offer PaaS and SaaS. PaaS means Amazon manage the platform (e.g. hardware / OS). SaaS means Amazon provides the product / service as well as the infrastructure.
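One way to picture the three models is as a table of who looks after each layer. The sketch below is a deliberate simplification: the three-layer split (hardware, operating system, application) is my own shorthand for the descriptions above, not an official AWS breakdown.

```python
# Simplified view of who looks after each layer under each model.
LAYERS = ["hardware", "operating system", "application"]

MANAGED_BY_PROVIDER = {
    "IaaS": {"hardware"},
    "PaaS": {"hardware", "operating system"},
    "SaaS": {"hardware", "operating system", "application"},
}


def customer_responsibilities(model):
    """Return the layers the customer still has to manage, in stack order."""
    provider_layers = MANAGED_BY_PROVIDER[model]
    return [layer for layer in LAYERS if layer not in provider_layers]


for model in MANAGED_BY_PROVIDER:
    remaining = customer_responsibilities(model)
    print(model, "-> customer manages:", remaining or "nothing")
```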

2. Typical AWS Architecture

Region / Availability Zone

AWS has multiple Regions around the world. A Region is a geographic location (e.g. London, Ireland). You will typically deploy your application to one Region (e.g. London).

An Availability Zone (AZ) is made up of one or more data centres. A Region will have multiple Availability Zones. This means if one AZ fails, the other one(s) will keep running so you have resiliency. If you deploy to the London Region, you can spread your application across its 3 AZs.

Key Point: Your application is likely to be hosted in 1 Region (e.g. London), across 3 Availability Zones.
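The resiliency idea can be sketched in a few lines of Python. This is a toy model, not how AWS places instances: the instance names are hypothetical, and the AZ names simply follow the usual naming pattern for the London Region (eu-west-2).

```python
from itertools import cycle

# Illustrative AZ labels for the London Region (eu-west-2).
AVAILABILITY_ZONES = ["eu-west-2a", "eu-west-2b", "eu-west-2c"]


def spread_across_zones(instance_names, zones):
    """Assign instances to AZs in turn so no single AZ holds them all."""
    placement = {zone: [] for zone in zones}
    for name, zone in zip(instance_names, cycle(zones)):
        placement[zone].append(name)
    return placement


def surviving_instances(placement, failed_zone):
    """Instances still running if one AZ goes down."""
    return [
        name
        for zone, names in placement.items()
        if zone != failed_zone
        for name in names
    ]


placement = spread_across_zones(["web-1", "web-2", "web-3", "web-4"], AVAILABILITY_ZONES)
print(placement)
print(surviving_instances(placement, "eu-west-2a"))
```

If the first AZ fails in this example, web-2 and web-3 are still running in the other two AZs, so the service stays up.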

VPC / subnet

A VPC (Virtual Private Cloud) is your own chunk of the cloud. It allows you to create your own network in the cloud. Essentially a VPC is a subsection of the cloud – allowing you more control. You control what traffic goes in and out of the network.

A VPC sits at the region level. You can leverage any of the Availability Zones to create your virtual machines (e.g. EC2 instances) and other services. Within a VPC you can create many subnets – which are isolated parts of the network. Subnets are just a way to divide up your VPC and exist at the AZ level. You can have public or private subnets (or both).

The main AWS Services inside a VPC are EC2, RDS and ELB, although most things can now sit in a VPC.

Key Point: You’ll likely have 1 VPC (Virtual Private Cloud) in London & it will span all 3 AZs. A VPC gives your company an isolated part of AWS. You will create subnets to break-up the VPC into smaller chunks.

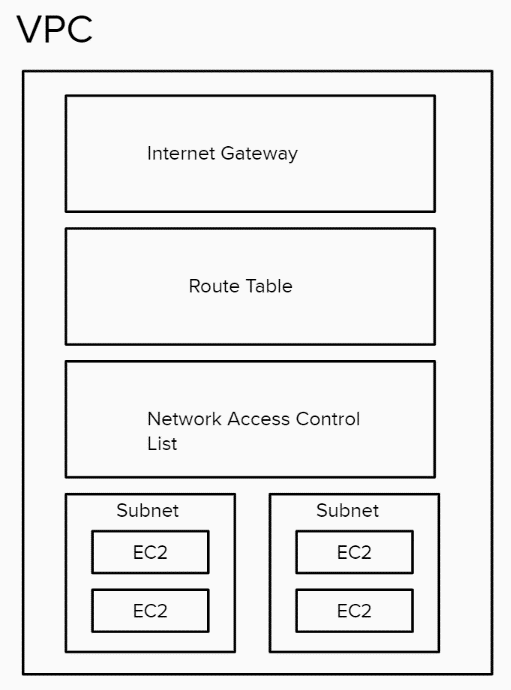

Internet Gateway = configures incoming and outgoing traffic to your VPC. It’s attached to the VPC & allows it to communicate with the Internet.

Route Table = Each VPC has a route table which makes the routing decision. Used to determine where network traffic is directed.

NACL = Network access control list. Acts as a firewall at the subnet level and controls traffic coming in and out of a subnet. You can associate multiple subnets with a single NACL. There are 2 levels of firewall in a VPC: the NACL at a subnet level and the security group at an EC2 instance level.

Subnet = a subnetwork inside a VPC. It exists in 1 AZ. You can assign it an IP range & it allows you to control access to resources (e.g. you could create a private subnet for a DB and ensure it’s only accessible from within the VPC).

NAT (not represented in the diagram) = Network address translation. NATs are devices which sit on the public subnet and can talk to the Internet on behalf of EC2 instances which are on private subnets.
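To show roughly how a route table (described above) makes its decision: each route maps a destination range to a target, and the most specific matching route wins. The sketch below uses Python's standard `ipaddress` module; the address ranges and the target labels ("local", "internet-gateway") are made up for illustration.

```python
import ipaddress

# A made-up route table: destination range -> target.
ROUTE_TABLE = {
    "10.0.0.0/16": "local",           # traffic staying inside the VPC
    "0.0.0.0/0": "internet-gateway",  # everything else
}


def pick_target(destination_ip, route_table):
    """Return the target of the most specific route containing the address."""
    address = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if address in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    # The longest prefix (largest prefixlen) is the most specific route.
    _, best_target = max(matches, key=lambda item: item[0].prefixlen)
    return best_target


print(pick_target("10.0.1.25", ROUTE_TABLE))     # an address inside the VPC
print(pick_target("203.0.113.10", ROUTE_TABLE))  # an address on the Internet
```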

Every VPC comes with a private IP address range, which is written as a CIDR (classless inter-domain routing) block, e.g. 10.0.0.0/16. A VPC also comes with a default local router that routes the traffic within the VPC.
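If CIDR notation is new to you, Python's standard `ipaddress` module is a handy way to see what a range contains and how it can be divided into subnets. The 10.0.0.0/16 range and the choice of /24 subnets are assumptions for the example; your project's ranges will differ.

```python
import ipaddress
from itertools import islice

# Assumed example range for the VPC.
vpc = ipaddress.ip_network("10.0.0.0/16")
print(vpc, "contains", vpc.num_addresses, "addresses")

# Carve the VPC range into smaller /24 subnets and take the first three,
# for example one per Availability Zone.
subnets = list(islice(vpc.subnets(new_prefix=24), 3))
for subnet in subnets:
    print(subnet, "contains", subnet.num_addresses, "addresses")

# Every subnet sits inside the VPC range.
all_inside = all(subnet.subnet_of(vpc) for subnet in subnets)
print("All subnets inside the VPC range:", all_inside)
```

The number after the slash says how many leading bits are fixed, so a smaller number means a bigger range: a /16 holds 65,536 addresses and a /24 holds 256.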

3. Key AWS Concepts

EC2 / EBS / AMI – server, storage, machine image

EC2 = Elastic Compute Cloud. It’s a virtual machine in the cloud. You can run applications on it. It’s a bit like having a computer. It’s at an AZ level. You launch the EC2 instance from an image (e.g. Windows or Linux) & choose the size (CPU / memory / storage). Storage that is local to the instance is not persisted (e.g. if you delete an EC2 instance that storage is lost), so you will need EBS.

EBS = Elastic Block Store. It’s like a hard drive that is attached to an EC2 instance. It sits at an AZ level, so the volume and the instance must be in the same AZ. You use it for storing things like the EC2 Operating System. It behaves like a raw, unformatted block device & is used for persistent storage.

Some other storage options in AWS include:

- S3 (Simple Storage Service) = Object Storage. Essentially a bucket where you can store things – S3 can be accessed over the internet. S3 is flat storage (there’s no hierarchy). It offers unlimited storage. Used for uploading and sharing files like images/videos, log files & data backups etc

- EFS (Elastic File System) = File Storage. It’s shared between EC2 instances. It allows a hierarchical structure. It’s at a region level and can be accessed across multiple AZs. Used for web serving, data analytics etc
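The "flat storage" point about S3 can be confusing because keys often look like file paths. Here is a toy model in plain Python (it does not call AWS) where a bucket is just a mapping from key to contents; the key names are hypothetical.

```python
# A bucket modelled as a flat mapping of key -> object contents.
bucket = {
    "images/2023/logo.png": b"logo bytes",
    "images/2023/banner.png": b"banner bytes",
    "logs/app.log": b"log bytes",
}


def list_keys(bucket, prefix=""):
    """Return every key that starts with the given prefix, sorted."""
    return sorted(key for key in bucket if key.startswith(prefix))


# There is no real 'images' folder, only keys that share a prefix.
print(list_keys(bucket, prefix="images/"))
print(list_keys(bucket, prefix="logs/"))
```

In this model what looks like a folder is just a shared prefix in the key name, which is the sense in which object storage has no hierarchy.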

AMI = Amazon Machine Image. A template that contains the software configuration (e.g. OS, application, server) required to launch your EC2 instance.

Key Point: You will spin up EC2 instances on your subnets. EC2 instances are like computers (with OS, CPU, memory, storage) & you can run your application on them. EBS is storage attached to an EC2 instance. AMI is a template for launching EC2 instances.

ELB, Autoscaling & CloudWatch – load balancing, scaling, monitoring

Elastic Load Balancer (ELB) allows you to balance incoming traffic across multiple EC2 instances. It allows you to route traffic across EC2 instances so that they’re not overwhelmed.

Autoscaling adds or removes capacity behind the ELB on the fly. It increases or decreases the number of EC2 instances based on a scaling policy: instances are added when a threshold value is exceeded and removed when they are not being utilised.

CloudWatch is a monitoring service. It monitors the health of resources and applications. If an action needs to be taken it will trigger the appropriate resources via alarms. CloudWatch alarms are what trigger the autoscaling.

Key Point: Elastic Load Balancer (ELB) distributes traffic across your existing EC2 instances. CloudWatch monitors the service & triggers autoscaling. Autoscaling scales the number of EC2 instances up or down.
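The monitor-then-scale loop can be sketched as a simple threshold rule. This is a simplified model of the idea rather than the real autoscaling service: the thresholds, the minimum and maximum, and the CPU readings are all assumed values.

```python
def desired_instance_count(current, avg_cpu_percent,
                           scale_out_above=70, scale_in_below=30,
                           minimum=2, maximum=10):
    """Apply a simple threshold policy to one monitoring reading."""
    if avg_cpu_percent > scale_out_above:
        target = current + 1   # alarm: instances are too busy
    elif avg_cpu_percent < scale_in_below:
        target = current - 1   # alarm: instances are under-used
    else:
        target = current       # within the comfortable range
    # Never go outside the configured minimum and maximum.
    return max(minimum, min(maximum, target))


# Assumed sequence of average CPU readings from the monitoring service.
readings = [45, 82, 91, 76, 50, 20, 15]
count = 2
history = []
for cpu in readings:
    count = desired_instance_count(count, cpu)
    history.append(count)
print(history)
```

With these readings the instance count climbs from 2 to 5 while the CPU is above 70%, holds steady at 50%, then drops back towards the minimum as traffic falls away.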

IAM – access management

IAM = Identity and Access Management. This is where you manage access to AWS resources (e.g. an S3 bucket) & the actions that can be performed (e.g. create an S3 bucket). It’s commonly used to manage users, groups, IAM Access Policies & roles. You can use IAM roles for example to grant applications permissions to AWS resources.

IAM is set at a global level (above region level – essentially at an AWS account level).

Key Point: IAM is where you manage access to computing, storage, database & application services. You can decide what resources a user or application can access, and what actions they can perform.
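For a feel of what an access policy looks like, the sketch below writes one in the JSON shape that IAM policies use (shown as a Python dictionary) and checks requests against it. The bucket name is hypothetical, and the `is_allowed` function is a heavily simplified stand-in for IAM's real evaluation logic: it only captures the idea that everything is denied by default and an explicit Deny beats an Allow.

```python
from fnmatch import fnmatchcase

# A policy in the JSON shape IAM uses. The bucket name is hypothetical.
POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [
                "arn:aws:s3:::example-reports",
                "arn:aws:s3:::example-reports/*",
            ],
        },
        {
            "Effect": "Deny",
            "Action": ["s3:DeleteObject"],
            "Resource": ["arn:aws:s3:::example-reports/*"],
        },
    ],
}


def is_allowed(policy, action, resource):
    """Simplified check: an explicit Deny wins, otherwise an Allow is needed."""
    allowed = False
    for statement in policy["Statement"]:
        action_match = any(fnmatchcase(action, p) for p in statement["Action"])
        resource_match = any(fnmatchcase(resource, p) for p in statement["Resource"])
        if action_match and resource_match:
            if statement["Effect"] == "Deny":
                return False
            allowed = True
    return allowed  # stays False when nothing matched (default deny)


report = "arn:aws:s3:::example-reports/q1.csv"
print(is_allowed(POLICY, "s3:GetObject", report))
print(is_allowed(POLICY, "s3:DeleteObject", report))
print(is_allowed(POLICY, "s3:PutObject", report))
```

Reading a report is allowed, deleting one is explicitly denied, and uploading one is denied simply because nothing in the policy allows it.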

ELK – analytics, data processing & visualisation

ELK = Elasticsearch + Logstash + Kibana. It’s often used to aggregate and analyse the logs from all your systems.

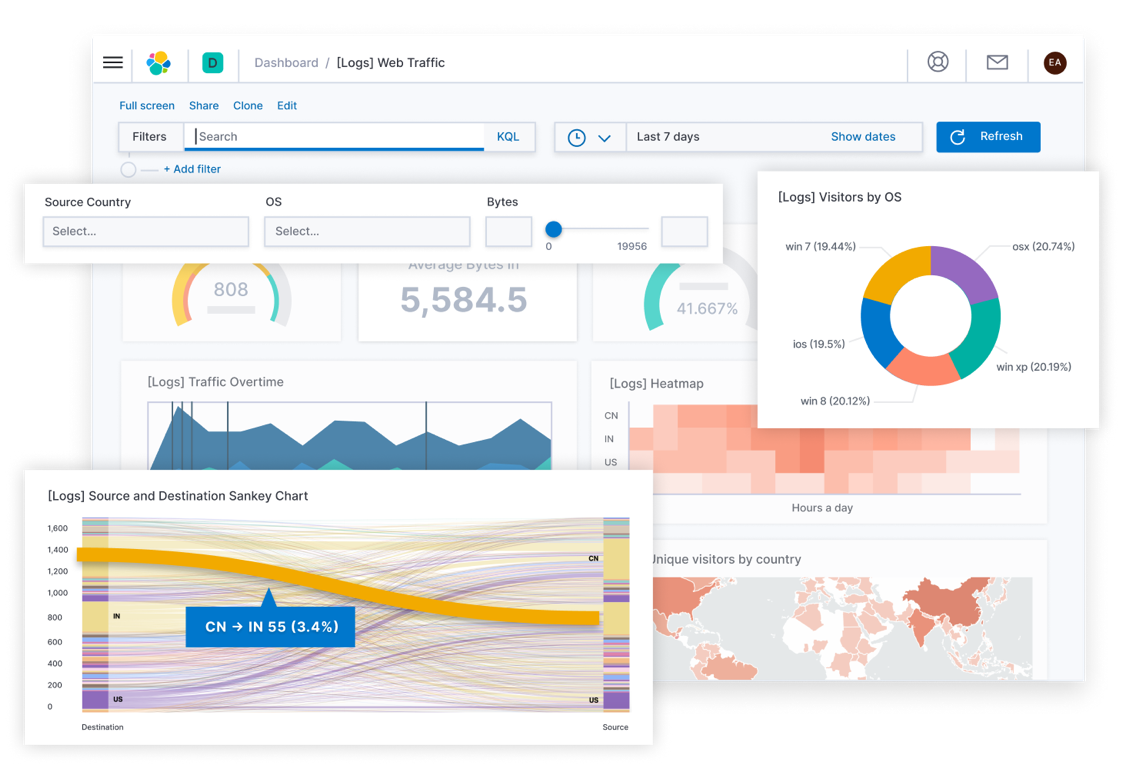

Elasticsearch is a search and analytics engine. Logstash is used for data processing; Logstash ingests data from multiple sources, transforms it & sends it to Elasticsearch. Kibana lets you view data with charts and graphs. Here’s an example from Kibana:

Elastic Stack is the next evolution of ELK. It includes Beats:

- Beats = lightweight, single purpose data shippers. Sits on your server and sends data to Logstash or Elasticsearch

- Example Beats include: Filebeat (ships logs and other data), Metricbeat (ships metric data), Packetbeat (ships network data)

As a note - there is an Amazon-managed elastic service called 'Amazon OpenSearch Service'.

Key Point: ELK lets you analyse logs and visualise them on a dashboard. You can see errors, volumes, performance (& more) for your service. Elastic Stack is ELK + Beats (data shippers).
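The ingest, transform and analyse flow can be imitated in a few lines of plain Python. This does not use Elasticsearch, Logstash or Kibana themselves; the log format and the sample lines are invented purely to show the shape of the pipeline.

```python
import json
import re
from collections import Counter

# Assumed log format: "<date> <time> <LEVEL> <message>".
LOG_LINE = re.compile(
    r"^(?P<date>\S+) (?P<time>\S+) (?P<level>[A-Z]+) (?P<message>.*)$"
)

# Invented sample log lines.
raw_logs = [
    "2024-01-15 09:00:01 INFO user logged in",
    "2024-01-15 09:00:05 ERROR payment service timeout",
    "2024-01-15 09:00:09 ERROR payment service timeout",
    "not a recognised log line",
]


def transform(line):
    """Turn one raw line into a structured record, or None if it doesn't parse."""
    match = LOG_LINE.match(line)
    return match.groupdict() if match else None


# Ingest and transform step (the Logstash role in this sketch).
documents = [doc for doc in map(transform, raw_logs) if doc is not None]

# Search and aggregate step (the Elasticsearch role): count records per level.
counts_by_level = Counter(doc["level"] for doc in documents)

# A dashboard (the Kibana role) would chart these counts; here we just print.
print(json.dumps(documents[0]))
print(dict(counts_by_level))
```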

4. Example AWS Implementations

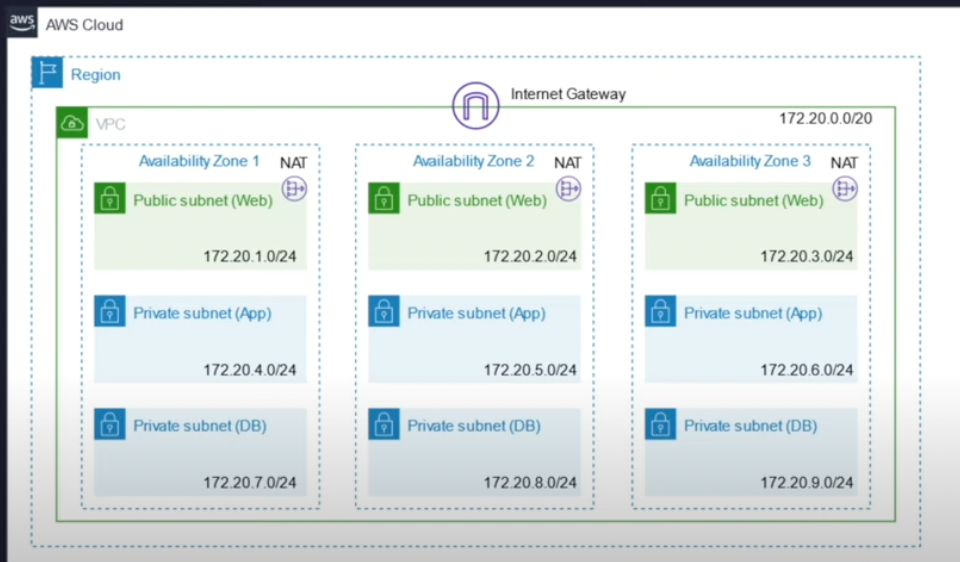

#1 Simple example - VPC in 1 region, 3 AZs, with multiple subnets

Here we have a VPC spanning 3 AZs. This VPC could be in the London Region.

To segment the VPC into smaller networks – they have set up private and public subnets. Each subnet is likely to have EC2 instances / DB instances in it.

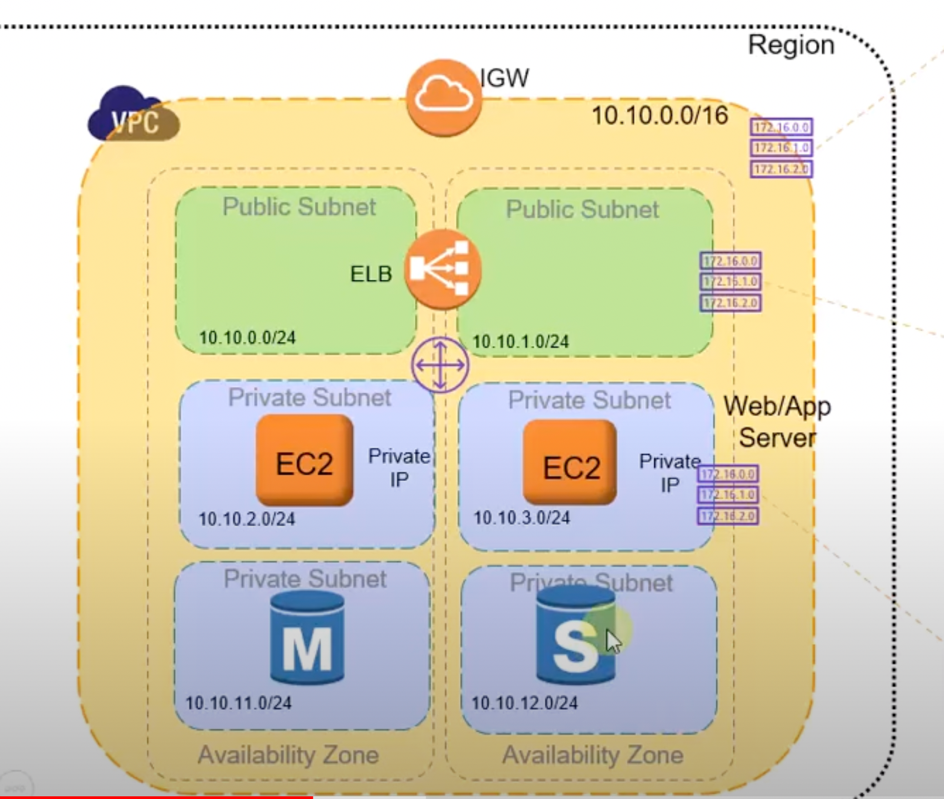

#2 Detailed example - VPC in 1 region, 2 AZs, with multiple subnets (public and private)

In this example you have a VPC in 1 Region across 2 AZs. You can see that they’ve set up public subnets (to connect to the Internet) and private subnets (for EC2 instances and to host a DB with private information). The IGW (Internet Gateway) is attached to the VPC; it controls incoming & outgoing traffic and allows the VPC to communicate with the Internet.

There is an Elastic Load Balancer (ELB) which is being used to balance incoming traffic across EC2 instances – so that the EC2 instances are not overwhelmed. It’s not shown here – but they may also be using CloudWatch and Autoscaling to increase / decrease the number of EC2 instances depending on traffic.

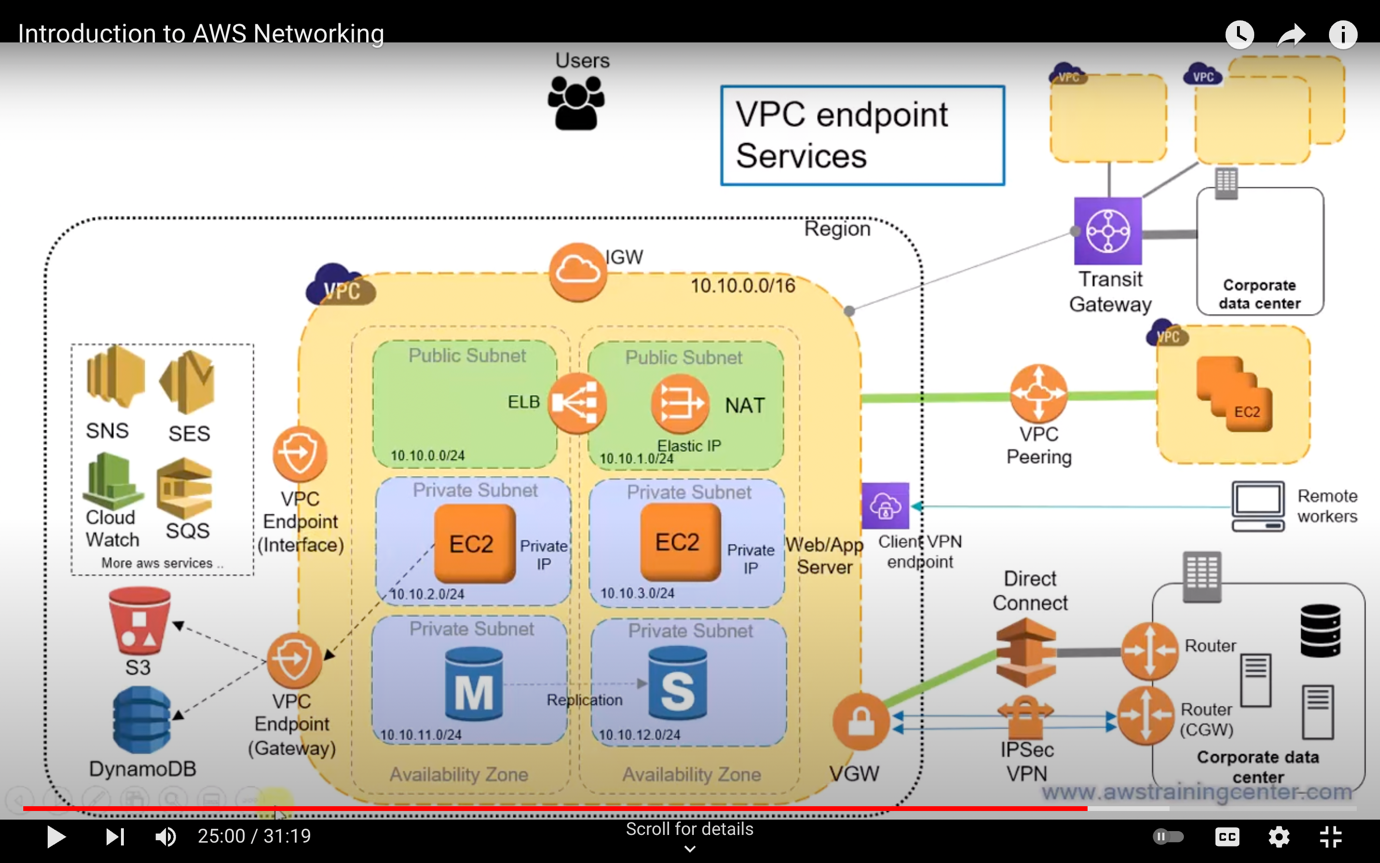

#3 Complex example - Multiple VPCs, VPC peering, transit gateway, VPN tunnels and direct connects

Looking at the right-hand side of the image, there are multiple VPCs in this design.

One big application may be spread across multiple VPCs. VPC peering allows one VPC to talk to another using a dedicated and private network. They can be in the same AWS Region or a different AWS Region. It means you don’t have to talk over the public internet but via AWS managed connectivity. HOWEVER this is VPC-to-VPC, and if you have many VPCs this becomes complex because it’s a 1:1 connection between each pair of VPCs.

If you want to connect hundreds of VPCs you can use a transit gateway. With this design all VPCs connect to a transit gateway + the transit gateway can connect to any VPC (it acts like a hub).
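A quick bit of arithmetic shows why peering gets complex as the number of VPCs grows. The sketch assumes every VPC needs to talk to every other VPC, which is the worst case; real designs often need fewer links.

```python
def peering_connections(vpc_count):
    """Connections needed so every VPC is peered directly with every other."""
    return vpc_count * (vpc_count - 1) // 2


def transit_gateway_attachments(vpc_count):
    """Connections needed when each VPC attaches once to a central hub."""
    return vpc_count


for n in (3, 10, 100):
    print(f"{n} VPCs: {peering_connections(n)} peering connections "
          f"vs {transit_gateway_attachments(n)} transit gateway attachments")
```

For 3 VPCs the two approaches are level at 3 connections each, but for 100 VPCs full peering needs 4,950 connections against 100 attachments to the hub.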

There is a 3rd way to connect a VPC to another VPC – if you don’t want to expose all the machines in one VPC (e.g. if it’s a SaaS product). It’s not represented in this diagram, but if you only want to expose 1 service you can use AWS PrivateLink. This lets an interface VPC endpoint in the consuming VPC connect to the Network Load Balancer that sits in front of the service in the other VPC.

Finally – in the bottom right you can see a Virtual Private Gateway. This allows your VPC to connect to your on-prem network or your on-prem data centre. It can enable connectivity using VPN tunnels or a dedicated connection called AWS Direct Connect (the latter gives more bandwidth and reliability). Essentially it’s used for hybrid connectivity – where some of your workloads are on-premises & some are in AWS.

5. Conclusions

The aim of this article was to provide an overview of a very technical area (AWS).

I haven’t listed all of the AWS technologies (e.g. Kubernetes, containers, serverless). If you’re interested in learning more about AWS I’d recommend:

I hope you find this useful. I’d like to thank Matt Clark (Head of Architecture for the BBC’s Digital Projects) & Gavin Campbell (DevOps Engineer at Equal Experts) for providing feedback on the article.

Author: Ryan Hewitt, Senior Business Analyst


Ryan Thomas Hewitt has over 5 years of business analyst experience working for blue chip companies in India, Germany, the USA, Italy and the UK. Ryan holds certifications in scrum, lean, ITIL, change management and NLP.