Every area of practice in IT has a set of specific “tools” that supports the standard work of technology professionals. Data analysis is the capturing of data requirements, developing models that reflect those requirements and creating a design to store the data. You can accomplish this with a pencil, paper, and the right skill-set. But it can be done much more quickly and consistently if the process is automated.

There are hundreds of individual software tools and tool-suites that support different facets of data analysis. When Modern Analyst asked me to prepare an article on data analysis tools, I decided to provide a list of questions that I’ve found useful in filtering the requirements of a tool selection exercise. The alternative, providing a laundry list of tools, did not seem as helpful as equipping you to make your own decision. I’ll also provide references to other locations on the internet that I’ve used when searching for tools myself.

In order to organize this list of questions I thought about how I accomplish data analysis. Most engagements for me fall into a consistent workflow. First I need to listen and to collaborate with a person or group providing requirements. Next I document those requirements a combination of text and picture to refine and reflect back the understanding of the business needs for information. Presenting back work products can be necessary for a variety of reasons: executive overview, validation by the business user, and peer-review. Finally, the deliverables need to have sign-off and be passed on to the resources that will take the design and create the physical structures and implement the application.

Because communication and collaboration are critical, the best choice is one that is consistent with any existing standards or tools being used in a company. I believe that even if there are new tools with fancy functionality, being able to share work products easily between groups is a high priority item.

If there is no current investment in data management tools, going through a request-for-information (RFI) is a great approach to finding the right solution for your needs. Treat this as you would any commercial-off-the-shelf (COTS) package evaluation. Ask about the vendor’s company, the size of the user base for the tool, the technical platform on which it was developed, the support staff, the release strategy, and all of the other questions that typically come into play with a software purchase. Make sure that it fits in the technical architecture that your firm has established. For the specific functionality around data analysis, I suggest that you include the following categories of questions.

ILLUSTRATE

► Can the tool draw an Entity-Relationship (ER) Diagram easily?

The technique of creating a picture that includes lines, boxes, and connectors is the bread-and-butter of data modeling. The tool should allow the data analyst to move objects around the diagram easily.

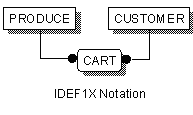

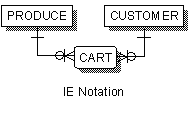

► Can it switch between different notation styles?

The pictures and the objects that make up the picture can follow many different standards. Depending on the structure of your department and the standards that are used by your company, one notation may be a higher priority than another. Over time, there is huge value in the ability to switch notations. Analysts from different backgrounds can review models with equally clear understanding of the meaning and purpose of the structures reducing the learning curve of bringing on new data modeling staff.

► Can it draw both Relational and Dimensional style ER diagrams?

In recent years, different techniques have been developed for modeling in on-line transaction processing (OLTP) databases and data mining oriented on-line analytical processing (OLAP) databases. Star-schema designs require different physical orientations for the data structures in an entity-relationship diagram. It is useful to have a tool that is able to display using either approach.

► Can it represent Conceptual Data Model (CDM), Logical Data Model (LDM), and Physical Data Model (PDM) levels of diagram?

Different levels of model are more appropriate for each type of audience. During a scoping exercise, executives and business users tend to be more comfortable with the CDM. Actual data requirements and business rules can typically be best displayed in a LDM. Database Analysts, Quality Assurance, Development and Production Support staff frequently refer to the PDM, since it is a visual representation of the actual tables, views, columns and indexes that are directly accessed through the application systems.

► Is the diagram easily transportable to other tools in multiple formats?

A picture can be worth a thousand words. A well structured diagram can be in user manuals, online help, in test-case documentation or even sales literature.

► Can the diagram be enhanced with colors, fonts, non-model text, and/or graphics?

During development, when the picture is actively being used to illustrate new or changed structures, color and font can bring attention to the appropriate portion of a model. After implementing system, annotating the picture to describe the structures or special situations makes the data model diagram a valuable tool while the structures are in active use.

► Can the tool represent graphically an existing system through an automated mechanism?

In some programs there is as much existing system enhancement as new system development. Deriving a model of the existing data structures is a critical success factor in maintenance projects. For others, comparing the documentation to the actual physical implementation ensures that modifications are implemented properly. Many tools offer support in automating this comparison, saving time and reducing the opportunity for manual errors.

DOCUMENT

► Can the tool generate documentation about a data design?

A picture may be worth a thousand words, but a picture without words will become unusable! And data without a name is completely useless…

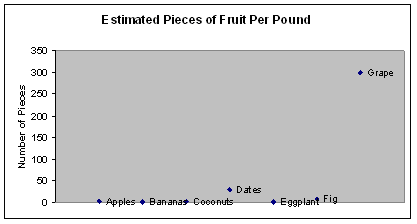

... means less than

| |

Estimated Pieces per Pound |

| Apples |

4

|

| Bananas |

2

|

| Coconuts |

1

|

| Dates |

30

|

| Eggplant |

1

|

| Fig |

8

|

| Grapes |

300

|

Information that describes the meaning, purpose, and details of a data structure is crucial to consistent understanding. Documentation created during a project has to be easily available to anyone using that data structure in the future. You should include any assumptions on which the data design was based. If one of the assumptions changes because of a shift in the business environment, this impact can be more easily assessed at a logical level.

► Is the data definition information, metadata, rich enough to completely describe data objects?

There are basic pieces of information that are captured in most data modeling tools, name, definition, attributes, data type, and optionality come immediately to mind. But some projects require more; they may need data stewardship, ownership or privacy classification characteristics that are not typically ‘out-of-the-box’. The assessment should identify whether the tool is expandable, allowing you to define new characteristics as required.

► Can business rules be documented easily in a structured way?

Most requirements for data are surrounded and bounded by business constraints. Having those constraints associated directly to data objects and relationships in a model can provide a two-fold benefit. Firstly, it can help describe access and update logic around the information. Secondly, it can be the basis from which to build both test cases and a standardized set of test data.

► Can the tool accept bulk text updates from other sources?

Frequently, tools that support data analysis and design are built with only one type of input mechanism…a human in front of a screen. Metadata management tools that have the capacity to load definitional information through an automated mechanism have a distinct advantage.

► Does it have the ability to generate metadata reports for a variety of delivery mechanisms (Wiki, .xml, .rtf, .PDF or .xls for example)?

The main goal of any data design and model should be to communicate, and different audiences may need the information in different formats.

RESEARCH

► Can your modeling tool allow access directly to the data?

This ability is rare in a modeling tool, more frequently data inspection is done through data access tools or data profiling tools.

► Can the tool allow access to multiple data sources through the same interface?

Many projects require data input and outputs to be on different technology platforms. For example a data warehouse may gather information from Oracle, DB2, Sybase, Informix, and flat files, and then undergo a match/merge process through an extract/transform/load (ETL) tool for final storage in a data mart on a completely different technology. Having to use multiple tools with a variety of interfaces to all of these sources in the same project would require you to have a much wider range of knowledge than having a single tool with the ability to connect to multiple sources.

► Can data inspection, to validate assumptions, be performed with a minimum of technical knowledge of the physical implementation platform?

Some Business Analysts understand how to code native SQL. If that is not your strength, look for a tool with a more automated GUI which generates the SQL in the background. The best tools support the modification of that generated SQL to perform the most complex inspection scenarios.

► Can data be analyzed using statistics?

Data quality can have an enormous impact on the type of business rules and coding requirements. If you have the ability to scan and assess sets of data to identify data issues during discovery, you will save that time and more during the requirements definition.

► Can the results of the analysis be delivered in more than one format?

Outlier data can be more easily identified through a graph:

But sometimes you need the metadata to decide whether the outlier is valid or an indication of a data quality issue:

PRESENT

► Can the diagram and text documentation be combined into a well-formed deliverable that can be structured for audiences at multiple levels?

At the end of the data analysis and design phases, a final deliverable should be generated in an appropriate format for each audience that it needs to inform. A tool that allows the generation of a document will allow you more time to focus on the content than the presentation. Typically, the executive sponsor does not need to see the physical table design, but the database administrator (DBA) does.

► Can sample data be associated easily with design deliverables?

A good set of data that includes examples of correct values as well as error situations can enhance your ability to explain the details for both business user and technology professional.

FOR ALL TOOLS

► Is the tool available for multiple simultaneous users on the same work product (for large project support)?

► Can the work-product be leveled into multiple subsections, and then if worked on in the subsection, is the work reflected in the overall picture?

► Can the work-product be versioned in the tool? Can you roll-back easily to a previous version?

► Is training easily available? Can it be delivered online on-demand?

No single tool is the best; but given your environment, there might be a tool or set of tools that is the best-fit for you and your company.

Resources:

- Common Data Modeling Notations - A comparison chart by Scott Ambler of different data modeling notations.

- The Data Administration Newsletter (TDAN) - Products and Companies – The section on Product and Companies of a newsletter published by Robert S. Seiner that is one of the most recognized sources of information about data management topics on the web.

- DMReview – a magazine, available both in print and on-line delivers market-leading insight through interviews, articles and columns written by the best consultants, hands-on practitioners and technology solution leaders the industry has to offer.

- Gartner and Forrester both provide a wealth of information useful for software selection. Check to see whether your company has service with one of them, or some other research services.

Author: Loretta Mahon Smith, CCP, CBIP, CDMP, is the Vice President for the Data Management Association - National Capitol Region, a ICCP Certification Counsel Member. She is a 25 year career Data Architect in the financial services industry.