Pretty often, in articles and conference talks, I hear how people solve their waterfall problems by implementing agile: Scrum, XP, Kanban, all the buzzwords, you name them.

Being an advocate for adaptive approaches in development myself, I still often struggle to understand the rationale behind many of the transitions to what people call "agile" in those instances. People take the popular frameworks and apply their processes without any cultural or broader organizational change. An extreme example was a speaker at a conference who talked about how glad they were to have turned agile: it had become so easy for them to develop things in small, predictable iterations that they could deliver on time. When I asked how they managed their stories so that they stayed independent and supported re-prioritization, they said: "We don't; priorities and the order of development never change after the scope is defined." To my next (I admit, a bit provocative) question, "Where is agile, then, if you don't cater for potential changes?", they said, "How can't you see it? We do iterations and the rituals", and then sent me to read the manual and not waste their time asking incompetent questions : )

I have been thinking quite a lot about such transitions recently. Obviously, organizations do not become agile straight away when they implement some agile practices. However, in some cases implementing a true agile culture doesn't seem to be the goal at all. This contradicts what is stated in, I guess, most if not all of the agile guides and manuals (as in "implement the whole thing, half measures won't bring the value"; see e.g. [1], which is a nice read, by the way). Still, companies go for it and are surprisingly happy!

So I came to a conclusion that I found interesting and want to share with the public: when making this transition, companies do not want to implement agile; they just want to run away from waterfall. And running away from waterfall can come in many shapes and forms, so the popular idea of comparing "waterfall" vs "agile" as two competing extremes is not conceptually correct.

The history and mystery of waterfall

When referring to waterfall, we always imagine a picture of 5 or 7 squares, one after another, where each square represents a stage of the software development process. It is assumed that once a stage is over we do not return to it; at the same time, the process does not proceed to the next stage until the previous one is complete. As an alternative, people talk about agile (e.g. Scrum, one of the most popular frameworks), where the very notion of stages is questioned. I feel that this comparison is inaccurate in its essence.

Let's have a look at the reasoning behind selecting a more or less agile approach on projects. At the end of the day, managing a project is mostly about managing uncertainty (or risks) on the way to delivering the final result. In this regard, people can select different strategies. Some projects require predicting as much as possible, having a cookbook of recipes for what to do in any situation that occurs and leaving little room to manoeuvre as the project goes (these are typically called predictive approaches). Others tend to solve issues and pivot as the need arises, and to be prepared for that (adaptive approaches). So, in the predictive paradigm people try to forecast all possible outcomes and have a plan for each. In the adaptive approach they say it is impossible or not feasible to do so, so they instead include re-assessment and re-planning as a natural part of the process. The degree to which a company tends to be adaptive can differ between organizations, industries, or cultures.

This means that, instead of having just two extremes, waterfall vs agile, we have a whole continuum of variations on the line between predictive and adaptive. The more one tends to forecast and predict, the more predictive a framework one would tend to choose.

Figure 1. Continuum of approaches with regard to managing uncertainty.

There are best practices and frameworks that correspond to different segments of the range. The question is: where does the typical "waterfall" sit in this continuum? Provided we are talking about "best practices" and "established frameworks", my answer is “nowhere”.

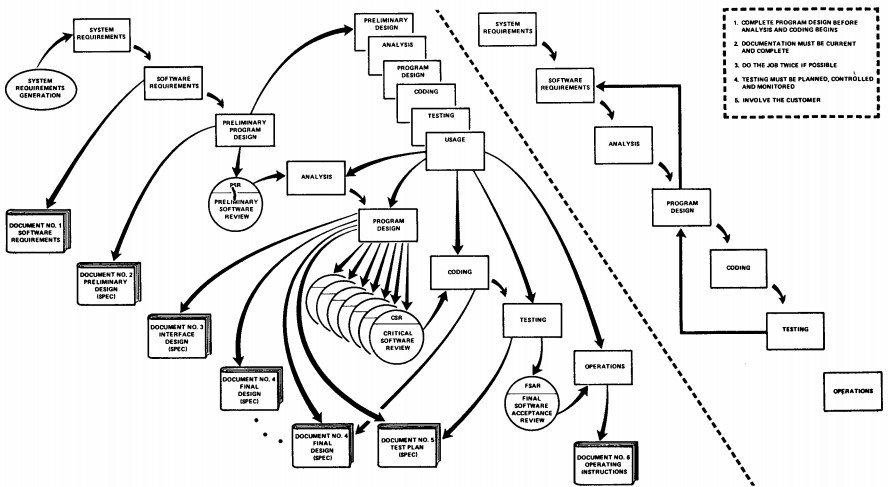

This will be less surprising if we go back to the very beginning. In 1970 Dr. Winston W. Royce published his famous article "Managing the Development of Large Software Systems", which is considered to be the beginning of the "Waterfall" era.

Figure 2 - Typical waterfall as described by Dr Royce. [2]

If you have ever seen the above picture before (and you probably have, many times), you should know that it really does originate from Dr Royce's article. However, this is how the author talks about the approach illustrated above:

"<…> the implementation described above is risky and invites failure."

And what is more interesting:

"The required design changes are likely to be so disruptive that the software requirements upon which the design is based and which provides the rationale for everything are violated. <...> In effect the development process has returned to the origin and one can expect up to a 100-percent overrun in schedule and/or costs."

So what does Dr Royce, also known as the father of waterfall, suggest?

- First, a preliminary program design phase has to be inserted between the software requirements generation phase and the analysis phase to assure that the software will not fail because of storage, timing, and data flux reasons (i.e. to assess the feasibility of the solution); then the design should be documented.

- The next recommendation is to do it at least twice! Build a prototype first, test it, then iterate on it (sic!).

- Third, he talks about managing and controlling the tests, especially highlighting that "many parts of the test process are best handled by test specialists who did not necessarily contribute to the original design", which is a consideration that has to be addressed on a project.

- Last but not least, he says that one has to involve the customer. I'll quote again: "Important to involve the customer in a formal way so that he has committed himself at earlier points before final delivery."

So a proper waterfall project will look something like this:

Figure 3 - A proper predictive project methodology as compared to impaired waterfall as described by Dr Royce [2]

Not the first thing that comes to our minds when hearing “waterfall”, right?

Let's compare these recommendations with the typical "waterfall problems" that people seek to solve with agile. Just a couple of highlights from different sources:

- "Often, designs that look feasible on paper turn out to be expensive or difficult in practice, requiring a re-design and hence destroying the clear distinctions between phases of the traditional waterfall model."[3]

- "The most prominent criticism revolves around the fact that very often, customers don't really know what they want up-front; rather, what they want emerges out of repeated two-way interactions over the course of the project."[3]

- "Once an application is in the testing stage, it is very difficult to go back and change something that was not well-thought out in the concept stage."[4]

- "Very less customer interaction is involved during the development of the product. Once the product is ready then only it can be demoed to the end users. Once the product is developed and if any failure occurs then the cost of fixing such issues are very high, because we need to update everywhere from document till the logic."[4]

The list can go on and on. Does it sound familiar? Yes. Is it something that was not considered in Royce's article? I doubt it.

There are, of course, typical aspects of predictive development that may be annoying or appear counter-productive compared to adaptive development, but let's leave them aside for now. My point here is that many of what people call the core problems of "waterfall" development already had a remedy in the very first article formalizing predictive software development.

Now let us think about this: about 50 years of thinking have passed between the date that article was published and now. Of course, management frameworks have developed, become more sophisticated, and adjusted to the modern world. So why do we still talk about the same problems Dr Royce talked about, such as infeasible products being designed, customers not being involved, and quality not being considered during design and development? And yet, when people implement agile processes, they get these issues resolved, even without actually becoming much more adaptive in nature!

The answer is obvious, and the answer is very sad. What companies face is bad project management practice, and the way they solve it is by implementing new processes, without actually aiming to transform from being predictive to being adaptive. They feel confident on the left side of the uncertainty management continuum, which is fine. But they suffer from not implementing the proper practices dictated by their position in that continuum. Agile is a buzzword now, so when a company realizes it lacks a project management culture, the first thing that comes to mind is: "we need to go agile". Most agile processes preach a culture of shared responsibility and total involvement, which makes it easier to pick up on project management flaws and eventually results in better projects. It makes the processes less ad hoc, which brings value.

Let's be honest: it is incredibly hard to implement a huge predictive project, and the pressure on project management is high. When these responsibilities are partially spread across the team, partially replaced by other processes, and partially revisited during training sessions as part of a transformation, the organization will definitely see improvements.

Why is it important?

I am still not sure whether the thinking above is correct, but it explains some of the "agile transformations" I've seen that never actually adopted an adaptive mindset.

Please don't get me wrong: I'm not saying that implementing agile frameworks is not needed and that we should all go back in time and dust off all the heavy methodologies we used to have.

I see it this way. When we have an illness, we want to understand the root cause, and not just treat the symptoms. In most cases the treatment won't change once we understand the causes, but sometimes it will. The same goes for organizations.

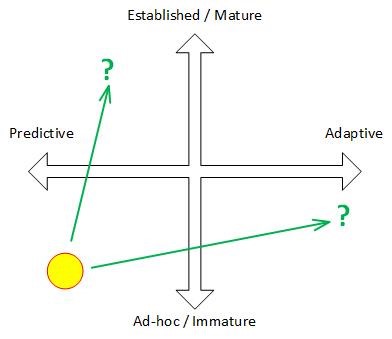

It is better to understand the "why" behind approaching an agile transformation: is it because the organization wants to move to the right in the predictive-adaptive range, or because it lacks quality and best practices within its current paradigm? If we put it on a chart, it translates to a question: does the organization want to move along the horizontal or the vertical axis? See Figure 4.

Figure 4. Potential paths from “waterfall” position

If a business faces problems and unsatisfactory results, it is time for a change. To define a proper change strategy, a company needs to understand the context of the change and the core needs that trigger it (refer to the Business Analysis Core Concept Model in [5]). We as business analysts should understand this better than others.

Understanding the reasoning behind the change will help implement it and reduce some unnecessary overhead that a company might have. It is always better to call a spade a spade; it avoids confusion and subsequent confusing conference talks : )

Author: Igor Arkhipov, CBAP.

Igor holds a Master of Business Informatics degree, specializing in Business Process Modeling and Optimization. Igor has broad experience as a Business Analyst and a BA Team Manager in the fields of customer support & services, software development and e-commerce, and is currently acting as a Lead Business Analyst at Isobar Australia.

https://au.linkedin.com/in/igarkhipov

References:

[1] Nader K. Rad, Frank Turley. EXIN Agile Scrum Foundation Workbook. ASIN: B00OZTMD52

[2] Winston W. Royce (1970). Managing the Development of Large Software Systems. Proceedings of IEEE WESCON, 26 (August): 1–9

[3] http://www.techrepublic.com/article/understanding-the-pros-and-cons-of-the-waterfall-model-of-software-development/

[4] http://istqbexamcertification.com/what-is-waterfall-model-advantages-disadvantages-and-when-to-use-it/

[5] International Institute of Business Analysis (2015). A Guide to the Business Analysis Body of Knowledge v.3. Toronto, Ontario, Canada