This article proposes a V-Model for agile development testing and invites feedback from the reader. Although the proposed model follows the same shape as the traditional testing V-Model developed nearly 3 decades ago, the modified V-Model presented here depicts the unique characteristics of agile development testing. The agile method used in this article is Scrum; the author assumes the reader is familiar with this solution development life cycle.

Traditional V-Model

The German Federal Ministry developed the traditional V-Model for testing in the 1980s; see Figure 1. Named for “verification and validation”, the V-Model depicts the “waterfall” Solution Development Life Cycle (SDLC) approach to software testing.

- Verification is the comparison of software to its technical specification. This effort is typically led by a systems analyst.
- Validation is the comparison of software to its business requirements. This effort is typically led by a business analyst.

From the viewpoint of the reader, the left side of the traditional “V” portrays various upfront and comprehensive business and technical documents, while the right side mirrors the corresponding levels of testing. Top-down, the testing levels are satisfaction assessment, acceptance testing, user testing, system testing, integration testing, and unit testing. The top 3 levels provide validation, while the lower 3 provide verification.

Read across from left to right, the model shows that each testing level can be planned before any software is coded; essentially, the V-Model is a high-level form of Test-Driven Development (TDD) (1). TDD is a technique where tests are developed prior to writing software code. For example, prior to coding, the business analyst can plan software validation by:

- Constructing a satisfaction assessment survey from the business needs
- Determining product owner acceptance criteria from features stated in the Product Vision
- Developing user testing scenarios/cases from the use cases (or functional requirement declarations) and business rules contained in the Business Requirements Document

Although both validation and verification planning and development are possible before software code is written, traditional waterfall project teams typically (and unfortunately) wait until after the code is written to plan and develop tests.

While the traditional V-Model has proven very useful for depicting testing in a waterfall SDLC, it does not adequately describe an agile SDLC. This is because generating comprehensive business requirements documentation upfront is unrealistic for “wicked” projects. A wicked project is one where the scope either lacks specific definition or changes dynamically due to fact discovery or shifting market conditions (2). In other words, the stakeholders only have a “notion” of what they want, and believe they will recognize “it” if and when they “see” it.

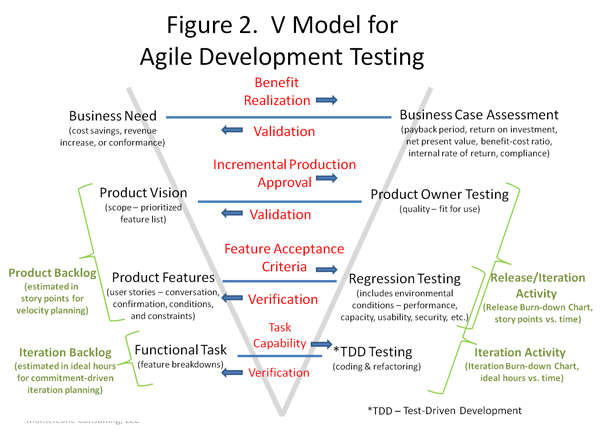

Proposed Agile V-Model

The V-Model for agile development testing depicts only 4 levels of testing with less formal documentation; see Figure 2. Note the model annotations use jargon from Scrum (i.e., Product and Iteration Backlogs as well as Iteration and Release Burn-Down Charts). Special Note: Testing is an iteration activity and part of the definition of “done”.

Left Side of the Agile “V”

As in the traditional V-Model, on the left side of the agile “V” there is a top-down progression of high-level solution deliverables starting with the business need.

- Business Need – states the business direction in the form of cost savings, revenue increase, conformance to laws or industry standards, or an executive edict

- With a statement of business need, the stakeholders scope the Product Vision of the solution by creating a list of prioritized software features. Led by a business analyst, the stakeholders attend a facilitation session where they brainstorm ideas, construct AS-IS and TO-BE models, conduct a gap analysis, and finally rank the product features of the software solution along with their benefits. Note that the stakeholders most likely also identify associated process improvements.

- Together with the stakeholders, the agile team elaborates the Product Features via user stories consisting of conversations and matching test confirmations, with constraints (business rules) and environmental conditions (e.g., system performance, capacity, usability, security) (3). The team then estimates the work required for each feature in story points for velocity planning. Velocity is the average number of story points a team completes in an iteration (4).

- For each iteration, the agile team breaks down the selected product features into Functional Tasks (e.g., input, store, retrieve, calculate, transmit, print) and estimates the work required in hours. This is called commitment-driven iteration planning (4).
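The velocity and iteration-planning arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a Scrum tool: the function names, the sprint history, the backlog size, the task-hour estimates, and the capacity are all hypothetical, and the greedy rule (commit features in priority order until the next one no longer fits the team's available hours) is a simplification of the team discussion that commitment-driven planning actually involves.

```python
from math import ceil
from typing import Dict, List, Sequence, Tuple


def velocity(points_completed: Sequence[int]) -> float:
    """Average story points completed per iteration over past iterations."""
    if not points_completed:
        raise ValueError("velocity needs at least one completed iteration")
    return sum(points_completed) / len(points_completed)


def iterations_remaining(backlog_points: int, avg_velocity: float) -> int:
    """Rough count of iterations needed to burn down the remaining backlog."""
    if avg_velocity <= 0:
        raise ValueError("velocity must be positive")
    return ceil(backlog_points / avg_velocity)


# Hypothetical features in priority order, each broken into Functional Tasks
# with hour estimates (task names follow the examples in the text).
FEATURES: List[Tuple[str, Dict[str, int]]] = [
    ("Search orders", {"input": 6, "retrieve": 8, "calculate": 4, "print": 5}),
    ("Email invoice", {"retrieve": 5, "calculate": 10, "transmit": 3}),
    ("Archive orders", {"store": 12, "retrieve": 9}),
]


def commit_features(features: List[Tuple[str, Dict[str, int]]],
                    capacity_hours: int) -> Tuple[List[str], int]:
    """Simplified commitment-driven planning: take features in priority
    order while their total task hours still fit the team's capacity."""
    committed: List[str] = []
    hours_used = 0
    for name, tasks in features:
        feature_hours = sum(tasks.values())
        if hours_used + feature_hours > capacity_hours:
            break  # stop committing once the next feature does not fit
        committed.append(name)
        hours_used += feature_hours
    return committed, hours_used


if __name__ == "__main__":
    v = velocity([18, 22, 20])
    print(v)                              # 20.0
    print(iterations_remaining(130, v))   # 7
    print(commit_features(FEATURES, 50))  # (['Search orders', 'Email invoice'], 41)
```

Keeping the two calculations separate mirrors the text: story points and velocity support release-level planning, while hour estimates of Functional Tasks support the commitment for a single iteration.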

Right Side of the Agile “V”

As in the traditional V-Model, the right side of the “V” mirrors the progressive definition side with matching testing levels. Again starting at the top:

- Business Case Assessment consists of economic indicators that confirm that the benefits stated in the Business Need are realized.

These indicators include the payback period, benefit-cost ratio (BCR), return on investment (ROI), net present value (NPV), and internal rate of return (IRR). Note that benefits need to be stated on a per-feature basis, since the product owner approves features incrementally (5). This allows the product owner to update the business case as soon as the benefits are realized. This is a real economic benefit of the agile approach: the sooner features are moved to production, the sooner their benefits begin and the less those benefits are discounted, so the higher the NPV. Note that if the business case is motivated by a conformance issue, the benefits are measured in compliance rather than in economic terms.

In the traditional V-Model, the matching test level of Business Need is a satisfaction assessment rather than a business case assessment. The satisfaction assessment is typically a user survey providing solution feedback such as solution errors, enhancements and lessons learned. Due to the continuous involvement of the product users in agile, the satisfaction assessment is redundant.
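The economic indicators listed for the Business Case Assessment are standard formulas, and a short Python sketch makes the claim about early delivery concrete. The function names are illustrative, the cash flows are hypothetical quarterly figures, and the 2% quarterly discount rate is an assumption. Payback is counted in whole periods on undiscounted cash, ROI is the simple undiscounted ratio, and IRR is found by plain bisection, which assumes a conventional stream (an outlay first, then benefits) so that NPV changes sign only once.

```python
from typing import Optional, Sequence


def npv(rate: float, cash_flows: Sequence[float]) -> float:
    """Net present value; cash_flows[0] occurs now and is not discounted."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def benefit_cost_ratio(rate: float, benefits: Sequence[float],
                       costs: Sequence[float]) -> float:
    """Present value of benefits divided by present value of costs."""
    return npv(rate, benefits) / npv(rate, costs)


def roi(total_benefit: float, total_cost: float) -> float:
    """Simple (undiscounted) return on investment."""
    return (total_benefit - total_cost) / total_cost


def payback_period(cash_flows: Sequence[float]) -> Optional[int]:
    """First period whose cumulative undiscounted cash flow is >= 0, or None."""
    cumulative = 0.0
    for t, cf in enumerate(cash_flows):
        cumulative += cf
        if cumulative >= 0:
            return t
    return None


def irr(cash_flows: Sequence[float], low: float = -0.99, high: float = 1.0,
        tol: float = 1e-9) -> float:
    """Internal rate of return (the rate where NPV = 0), found by bisection."""
    f_low = npv(low, cash_flows)
    if f_low * npv(high, cash_flows) > 0:
        raise ValueError("NPV does not change sign between low and high")
    for _ in range(200):
        mid = (low + high) / 2
        f_mid = npv(mid, cash_flows)
        if abs(f_mid) < tol:
            return mid
        if f_low * f_mid < 0:
            high = mid                # root lies in [low, mid]
        else:
            low, f_low = mid, f_mid   # root lies in [mid, high]
    return (low + high) / 2


# Hypothetical quarterly cash flows: the same 100 outlay and the same eight
# quarterly benefits of 25, starting either next quarter (features released
# incrementally) or three quarters later (features released all at once).
incremental = [-100.0] + [25.0] * 8
big_bang = [-100.0, 0.0, 0.0, 0.0] + [25.0] * 8
RATE = 0.02  # assumed discount rate per quarter

if __name__ == "__main__":
    for name, flows in (("incremental", incremental), ("big bang", big_bang)):
        print(name, round(npv(RATE, flows), 2), payback_period(flows),
              round(irr(flows), 4))
```

Both streams return the same simple ROI (200 of benefit on 100 of cost), so only the time-sensitive indicators (NPV, IRR, and payback period) reward moving features to production sooner.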

- Product Owner Testing is when the owner of the solution reviews the completed features of the current release/iteration and decides whether the Product Vision features are of sufficient quality to be “fit for use” for an incremental move to production (6). This contrasts with the traditional V-Model, where the product owner applies the quality definition of “conformance to written and approved requirements” using the formal Business Requirements Document (BRD).

- Regression Testing, also known as continuous integration testing or automated testing, is a suite of tests run repeatedly to ensure that all acceptance criteria for developed features still pass (no errors). This level of testing also covers the solution's environmental conditions as stated in the Product Features.

-

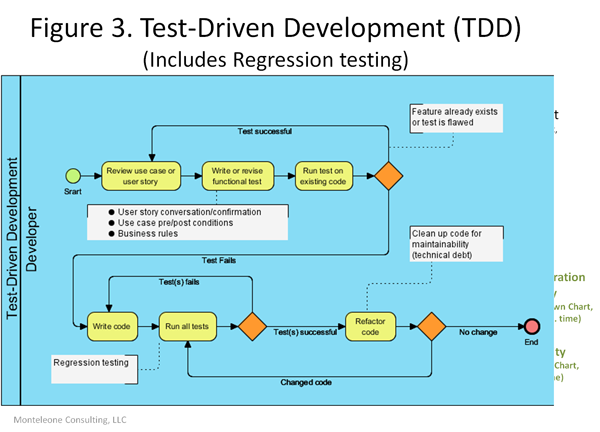

Test-Driven Development (TDD) is the 1) development of functional tests prior to coding, 2) coding, 3) test execution and 4) refactoring of the Functional Tasks; see the process model in Figure 3. Refactoring is “cleaning” up code for efficiency and/or maintainability (yes, documentation), not adding or changing tasks; sometimes referred to as technical debt.
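The TDD cycle, and the way its tests accumulate into a regression suite, can be illustrated with Python's standard unittest module. The Functional Task is hypothetical: a "calculate" task for an order total with an assumed business rule (orders of 100.00 or more receive a 5% discount), and the names order_total and run_regression_suite are illustrative. The tests were written first and fail until order_total exists (step 1); the function is then coded (step 2) and the tests executed (step 3); finally the discount figures are pulled out into named constants, a refactoring that leaves behavior unchanged (step 4). Re-running every accumulated test on each build is what the Regression Testing level does.

```python
import unittest
from typing import Sequence

# Step 4 (refactor): magic numbers extracted into named constants; behavior unchanged.
DISCOUNT_THRESHOLD = 100.00  # hypothetical business rule
DISCOUNT_RATE = 0.05


# Step 2 (code): just enough production code to make the tests below pass.
def order_total(prices: Sequence[float]) -> float:
    """Sum the line prices and apply the volume discount when the rule applies."""
    subtotal = sum(prices)
    if subtotal >= DISCOUNT_THRESHOLD:
        subtotal *= 1 - DISCOUNT_RATE
    return round(subtotal, 2)


# Step 1 (test first): written before order_total existed, so they failed initially.
class OrderTotalTest(unittest.TestCase):
    def test_no_discount_below_threshold(self):
        self.assertEqual(order_total([40.00, 59.99]), 99.99)

    def test_discount_at_threshold(self):
        self.assertEqual(order_total([60.00, 40.00]), 95.00)

    def test_empty_order_totals_zero(self):
        self.assertEqual(order_total([]), 0)


# Step 3 (execute) and, in later iterations, regression: run every accumulated test.
def run_regression_suite() -> bool:
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(OrderTotalTest)
    result = unittest.TextTestRunner(verbosity=2).run(suite)
    return result.wasSuccessful()


if __name__ == "__main__":
    run_regression_suite()
```

Returning a boolean rather than calling unittest.main() keeps the sketch usable from another script or a build step, which could fail the build whenever the suite does not pass.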

Summary

Like the traditional V-Model, the proposed V-Model for agile development testing highlights both validation and verification. Although the agile V-Model is by nature simpler (fewer test levels), it is just as thorough. The agile V-Model maintains, and truly enhances, the test-driven development concept. Most important, it completes the test life cycle with the business case assessment. Your comments on this proposed model are welcome.

Author: Mr. Monteleone holds a B.S. in physics and an M.S. in computing science from Texas A&M University. He is certified as a Project Management Professional (PMP®) by the Project Management Institute (PMI®), a Certified Business Analysis Professional (CBAP®) by the International Institute of Business Analysis (IIBA®), a Certified ScrumMaster (CSM) and Certified Scrum Product Owner (CSPO) by the Scrum Alliance, and certified in BPMN by BPMessentials. He holds an Advanced Master’s Certificate in Project Management (GWCPM®) and a Business Analyst Certification (GWCBA®) from George Washington University School of Business. Mark is the President of Monteleone Consulting, LLC.

References

1. Beck, Kent (2003), Test-Driven Development: By Example, Addison-Wesley

2. Monteleone, Mark (2010), The Tame, the Messy, and the Wicked, www.modernanalyst.com

3. Cohn, Mike (2004), User Stories Applied: For Agile Software Development, Addison-Wesley

4. Cohn, Mike (2005), Agile Estimating and Planning, Prentice Hall

5. Denne, Mark and Cleland-Huang, Jane (2004), Software by Numbers, Sun Microsystems

6. Schwaber, Ken and Beedle, Mike (2002), Agile Software Development with Scrum, Prentice Hall