Extreme Inspections are a low-cost, high-payoff way to assure specification quality, teach good specification practice effectively, and make informed decisions about the requirements specification process and its output, in any project. The method is not restricted to requirements-related material, but this article is limited to requirements specification. It presents firsthand experience and hard data to support the above claim. Using an industry case study I conducted with one of my clients, I describe the Extreme Inspection method in enough detail to understand what it is and why its use is almost mandatory, but not how to do it. I also present evidence of its strengths and limitations, as well as recommendations for its use and other applications.

Introduction

Business analysts around the globe write requirements specifications in many different formats, be it a classical Market or Software Requirements Specification (MRS/SRS), a set of Use Cases or User Stories, or Technical Specification Sheets. In many organizations the quality of these documents is assured one way or another, very commonly by peer or group reviews. In practice, the goal of such a QA step is usually to find defects in the specification, because once found they can be corrected. However, using QA methods like classical reviews, walkthroughs or inspections [IEEE] for this purpose on requirements specification documents (and most other systems engineering artifacts) is hopeless. Common industry QA practice in the software business rests on the wrong assumption that we can find enough defects that, once they are fixed, the specification is clean enough to be used without serious harm.

We already know that these methods are only about 40% effective, meaning they uncover fewer than half of all existing defects [Jones2008]. We also know that most specifications have 20 to 80 defects per page, even after an official 'QA passed' tag has been attached to them ([Gilb2008] and [Gotz2009]).

There is a smarter way. I'm not suggesting a new miraculous method with significantly higher effectiveness, but one with a different, yet realistic goal. We should use QA methods on software engineering artifacts for what they were designed to do: check quality. Note how 'checking quality' is quite different from 'finding and correcting defects'. Based on a reliable check, we can then make an informed decision about the future of the specification: does it need further improvement, or is it safe enough to use as-is?

I'm going to describe a very low-cost, effective approach to arrive at the necessary data to make such a decision: Extreme Inspections. This method will also significantly reduce the defect injection rate of any responsible specification writer, and thereby serve as an excellent defect prevention tool. Defect prevention is the heart of any real QA [Gilb2009].


Credit for inventing this powerful inspection variant goes to the expert systems engineer and resourceful teacher Tom Gilb [Gilb2005].

The article is structured as follows: First, it introduces a real industry project which served as the 'playground' for a case study using Extreme Inspections. Second, it gives a short description of the method. Note that you will have to study other sources (see [Gilb2005], [Malotaux2007/2] and [Gilb2009/2]) to fully understand the method's details, and to be able to do it. Third, it describes the two main experiments of the study and their rather impressive results. The article ends with conclusions and recommendations.

Context

I successfully tried Extreme Inspections in a project for one of my clients, with me in the QA role. The organization was at CMM Level 1, and the client was not very proficient at running IT projects. During a requirements specification period of almost 6 months, I wanted to make sure that the specification documents (almost 100 detailed use cases) were of excellent quality. We specified a future system that half existed in the form of a running system, and half was planned to provide new or extra features. The existing system had been up and running for a good seven years, with no usable requirements documentation. A system of an estimated 5,000 to 8,000 function points had to be built anew because a thorough business reengineering effort had broken the supported business processes into pieces. The goal was a complete requirements specification suitable for a Request For Proposal.

Five external business analysts did the specification work, each with one or two internal subject matter experts assigned for knowledge transfer in either direction. The subject matter experts were to maintain the specification documents for the coming years, without external help. Only one of the BAs was a true requirements specialist; two were systems engineers with an architecture background, and two were IT project managers with a more technical background. However, according to their resumes, all the BAs had at least two years of requirements specification experience. While I consider myself an expert requirements engineer, I chose to do 'only' QA, for various reasons that are irrelevant to the argument of this article. I was never formally trained for this role, but thought that my 12+ years of IT project practice and my engineering mindset would suffice. (They did.)

Method(s)

For the purposes of this article only, I briefly define two QA methods for written requirements documentation.

A peer expert review (short name: 'review') is an effort by a peer expert to detect defects in an entire specification on behalf of the author, who receives hints about where the specification might be improved. In some projects, and for some types of specification, the author has to correct enough of the detected defects to receive a 'QA passed' for the specification. In other cases it is left to the author to decide what to do with the defects. The peer expert checks against their own knowledge (is it a good specification?) and sometimes also against a standard set of formal rules (template completely filled out, all abbreviations explained, all references OK). As the reviewer reads the whole specification, quite a lot of knowledge is transferred from the author to the reviewer.

Note that this definition reflects common project practice, not only in the above-mentioned organization. It is, however, quite different from the IEEE definition [IEEE], for instance.

An Extreme Inspection (short name: 'inspection') is a special form of inspection. One main characteristic of inspections is that they check against defined rule sets, or checklists. Inspections in general check the entire specification to detect possible defects. Extreme Inspection, however, is a rigorous specification quality control discipline. It is concerned with defect detection and defect measurement, NOT defect removal; its aims are defect insertion prevention, process improvement, and entry/exit controls. It is based on evaluating how well a specification conforms to specification rules. With Extreme Inspections, we measure the quality of a specification by sampling (e.g. 1 to 3 pages, irrespective of the total number of pages), as this is usually sufficient to make an informed decision about the next steps for the specification.

Reviews, as defined above, follow a 'cure' mindset: the specification is broken, so let's fix it and get on with the project. Inspections, as defined above, assume something quite different: the authors (due to whatever circumstances) were not able to produce a defect-free specification; let's find out why and help them with the next version, and with all the specifications they will ever write. Reviewers and conventional inspectors think 'defect removal'; extreme inspectors think 'defect prevention'. Reviewers judge whether the specification is 'good' by some, often vaguely defined, standard. Inspectors judge whether the specification is 'well crafted' according to clearly defined standards (the specification rules). Clearly, all the quality potential is in the rules; the inspection process only helps set it free.

Examples of such specification rules (these are the ones I used in the project):

- Unambiguous to the intended readership
- Clear enough to test
- Does not state optional designs as 'requirements'
- Complete compared to all sources

An inspection, if done at the optimal checking rate of only a few pages per hour, detects significantly more defects than a review for the same effort, because sampling makes this slow, thorough checking rate affordable. While it can be beneficial to point out specific defects to the author, the output of an inspection is basically just a number: the number of rule violations that the average specification page probably contains. It is a statistically derived number, because inspections only sample from the entire specification. This number is compared with an allowable process exit level of 'x defects or fewer per page'. Based on this comparison, we either give the specification writer a learning opportunity and a chance to rewrite, and later re-inspect, their work; or we exit the specification from the process as good enough.
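The arithmetic behind that single number can be sketched in a few lines. This is a minimal illustration, not part of the method's published description: it assumes the checkers record, per sampled page, how many violations of each rule they found (the rules are the four listed above; the page data and all function names are hypothetical), estimates the average violations per page as a plain sample mean, and compares it with an exit level. The exit level of 8 defects per page is the one used later in Experiment B.

```python
from dataclasses import dataclass, field

# The four specification rules used in the project (from the list above).
RULES = (
    "Unambiguous to the intended readership",
    "Clear enough to test",
    "Does not state optional designs as 'requirements'",
    "Complete compared to all sources",
)


@dataclass
class SampledPage:
    """Rule violations recorded by the checkers on one sampled page (hypothetical structure)."""
    page_no: int
    violations: dict[str, int] = field(default_factory=dict)

    def total(self) -> int:
        return sum(self.violations.values())


def defects_per_page(sample: list[SampledPage]) -> float:
    """Estimate the average number of rule violations per page from the sample.

    Assumption: a plain sample mean. It is only an estimate for the whole
    specification, because only the sampled pages were checked.
    """
    if not sample:
        raise ValueError("need at least one sampled page")
    return sum(page.total() for page in sample) / len(sample)


def may_exit(sample: list[SampledPage], exit_level: float = 8.0) -> bool:
    """Exit condition: 'x defects or fewer per page' (x = 8 here, as in Experiment B)."""
    return defects_per_page(sample) <= exit_level


# Hypothetical two-page sample.
sample = [
    SampledPage(3, {RULES[0]: 9, RULES[1]: 7, RULES[3]: 4}),
    SampledPage(11, {RULES[0]: 6, RULES[1]: 5, RULES[2]: 2, RULES[3]: 3}),
]
print(defects_per_page(sample))  # 18.0
print(may_exit(sample))          # False: back to the author for a rewrite
```

The per-rule breakdown is kept even though only the total drives the exit decision, because it tells the author which rules they most need to learn.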

Experiments

Experiment A

I wanted the rigor of inspections and the knowledge-transfer effect of reviews, so I first ran Experiment A to find the proper order of the two methods. To that end, I used both methods on a set of 7 documents (specifications and plans) from the project initiation phase.

The reviews were done by peer experts checking the documents for defects. The inspections were Extreme Inspections that I did privately, in parallel with the reviews, on the same document versions. To gain insight into the return on investment of either method, and into the respective conditions of applicability, I compared

-

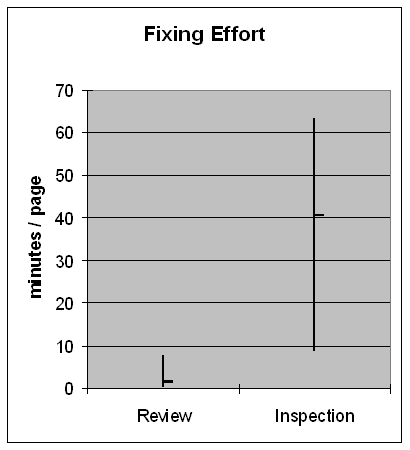

the effort spent on checking (see Figure 1),

-

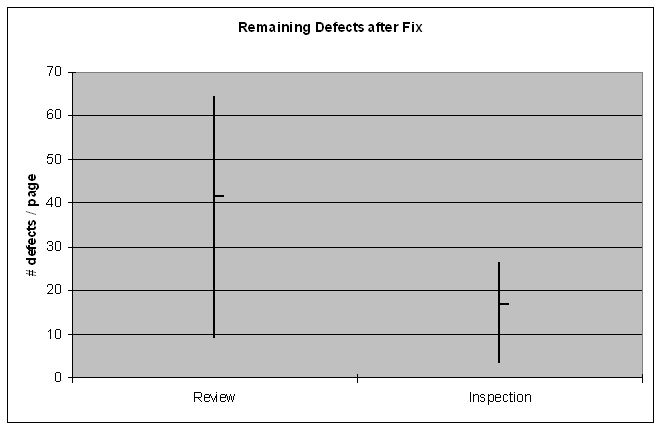

the effort spent on correction (see Figure 2),

-

the number of defects left after removal (see Figure 3), and

-

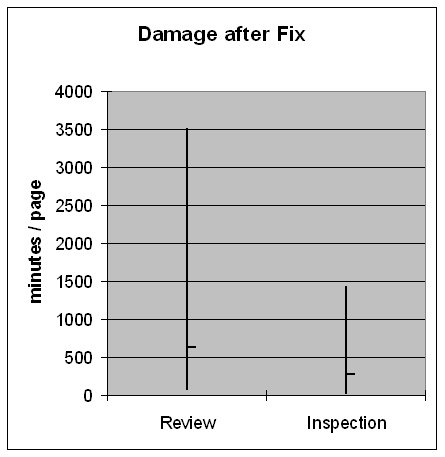

the damage still done to the project after defect removal (see Figure 4).

From the results of, and experience with, Experiment A (see the Results section), the necessary process sequence was clear: first make sure, with inspections, that the specification (or plan) is well written, and only then check with reviews whether it is a 'good' specification (or plan) with respect to real-world needs. Reviews for content are much more efficient if, for instance, the reviewed text is highly clear. So inspections measuring a certain level of intelligibility had to be done first (see Experiment B), to make sure precious content review time was not wasted.

Experiment B

The goal of Experiment B was to estimate various parameters:

- overall ROI of both inspections and reviews,
- checking cost for both inspections and reviews,
- rework cost for both inspections and reviews,
- reduced damage for both inspections and reviews,
- defect reduction ratio of inspections,
- number of inspections needed to reach the quality goal level,
- cost of the whole 2-step process,
- effort saved by the process, and
- what people think about the process.

As a project goal, I aimed to reduce the estimated cost of rework caused by defects in inspected documents by at least 100,000 EUR.

The task of specifying the roughly 100 use cases was given to the five analyst teams, clustered into groups of similar topics, which corresponded to the 16 most relevant business processes. I instructed the teams to first specify one or two use cases of a business process and then present them for inspection immediately, to minimize rework in case they were heading in a wrong direction.

My plan was to let them work in small batches, risking little effort each time and exploiting the learning effect of the inspection results from each batch.

Altogether I conducted 25 inspections on the 16 business processes during Experiment B. The author of the specification, the project's test manager and I acted as checkers. Usually one of the subject matter experts (SMEs) of the respective analyst team joined. No inspection lasted longer than 60 minutes. We sampled one use case at random from all the use cases of a business process, i.e. we inspected business processes, not use cases. We checked against the rules listed above.
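The sampling step can be sketched as follows. This is a minimal illustration under stated assumptions: the business processes are given as a mapping from process name to use case identifiers (the names and IDs are invented), and a seeded random number generator is used so that a sample can be reproduced. It covers only the selection; the checking itself is done by people against the rules.

```python
import random
from typing import Optional


def sample_use_cases(
    processes: dict[str, list[str]], seed: Optional[int] = None
) -> dict[str, str]:
    """Pick one use case at random per business process (hypothetical helper).

    The inspection result for the picked use case stands for the whole
    business process: we inspect business processes, not individual use cases.
    """
    rng = random.Random(seed)  # seeded RNG so the same sample can be drawn again
    return {
        process: rng.choice(use_cases)
        for process, use_cases in processes.items()
        if use_cases  # skip processes with nothing written yet
    }


# Hypothetical business processes and use case IDs.
processes = {
    "Order intake": ["UC-01", "UC-02", "UC-03"],
    "Invoicing": ["UC-10", "UC-11"],
    "Claims handling": ["UC-20", "UC-21", "UC-22", "UC-23"],
}
print(sample_use_cases(processes, seed=42))
```

Drawing at random, rather than letting the author choose, keeps the measured defect density from being biased toward the author's best-written use case.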

After a business process had exited the inspect/rewrite/inspect cycle with 8 defects per page or fewer, I scheduled a group review for the topic, with the author of the respective specifications and all SMEs as checkers. Every SME wanted to learn about all the business processes, so all of them attended the sessions, which lasted three to six hours. There, the checkers walked through every use case of a business process together: diagram by diagram, sentence by sentence, word by word. They checked for 'right compared to the business rules', 'no bells and whistles', 'do we want it that way', and 'can we handle the specification'. Defects were either corrected on the spot, or the author was tasked with correcting them afterwards.

Results

Experiment A

The results of Experiment A are depicted in Figures 1 to 4. They clearly answered the question of a smart order for the two methods: inspections first, reviews second. The inspections help ensure a certain base quality (see Figure 4), upon which it is reasonable to do the reviews, which also aimed at knowledge transfer. Reviewers can then concentrate more easily on the content, i.e. 'is it a good specification?'

Figure 1: As expected, inspecting a specification took about a third of the resources of a review, due to the time limit used.

Figure 2: Because inspections find significantly more defects, owing to the vastly increased thoroughness of the check, the effort needed to fix the defects uncovered by inspection was about 30 times higher than for reviews. Remember that it is relatively cheap to fix defects this far upstream [Malotaux2007].

Figure 3: As expected, inspections left fewer defects in the specifications (about three-fifths fewer).

Figure 4: After inspections, only about half of the downstream defect removal effort was needed, compared with reviews.

Experiment B

In our sample, inspections covered about 3% of the total number of pages. This means 97% of the pages remained unchecked, but of course not uncorrected. The number of defects was thereby driven down to about one-third, using an average of 2 inspections per business process. Each inspection cut the number of defects in the specification to about half. Note that this is an extraordinarily high reduction ratio; about 30% was expected. One explanation is that use case specifications are very structured, and all specifications had the same structure. Checking is relatively easy under these circumstances.
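Why the observed reduction ratio matters can be illustrated with a small model. This is a simplification under stated assumptions, not something measured in the study: it assumes each inspection-plus-rewrite cycle multiplies the defect density by a constant factor, and it uses the start and exit levels of about 24 and 8 defects per page from the rules of thumb derived from Experiment B. The function name is hypothetical.

```python
def cycles_to_exit(start: float, exit_level: float, retained: float) -> int:
    """Count inspect-and-rewrite cycles until the density reaches the exit level.

    Assumes each cycle multiplies the defect density by a constant factor
    `retained` (0.5 = "cut to about half"; 0.7 = the expected 30% reduction).
    """
    if not 0 < retained < 1:
        raise ValueError("retained must be between 0 and 1")
    density, cycles = start, 0
    while density > exit_level:
        density *= retained  # one inspection, feedback, and rewrite
        cycles += 1
    return cycles


print(cycles_to_exit(24, 8, 0.5))  # 2  (24 -> 12 -> 6)
print(cycles_to_exit(24, 8, 0.7))  # 4  (24 -> 16.8 -> 11.76 -> 8.23 -> 5.76)
```

With halving, the model needs two cycles, in line with the average of 2 inspections reported above; at the expected 30% reduction it would need four, roughly doubling inspection and rework effort.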

Quality-wise, the reviews brought the number of defects down only modestly, from 7-9 to 5-6 defects per page, but still with a reasonable ROI, as we shall see.

The monetary results of 25 inspections on 16 business processes (sample coverage ~3%), followed by 16 reviews (sample coverage 100%), are summarized in the following table.

| | Inspections | Reviews | Total | Basis |
|---|---|---|---|---|
| QA costs | 3,800 € | 20,500 € | 24,300 € | review records |
| Rework costs | 70,800 € | 7,000 € | 77,800 € | educated guess (RG), informed by Experiment A |
| Reduced damage | 352,000 € | 92,000 € | 442,000 € | educated guess based on assumptions (hourly rates, number of people involved, other parameters as in Experiment A) |
| ROI = Reduced damage / (QA costs + Rework costs) | ~ 5:1 | ~ 3:1 | ~ 4:1 | |

The goal of reducing the damage caused by specification defects by at least 100,000 EUR was clearly exceeded. The overall ROI was about 4:1, so despite the high number of inspections with their demanding rework, and despite the costly reviews, the QA process was a very good investment.
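The ROI figures in the table are reduced damage divided by the sum of QA and rework cost. A short sketch reproduces them from the table's column values; the class and field names are illustrative only. Summing the two columns gives 444,000 € of reduced damage (the table's Total column shows 442,000 €), which does not change the rounded overall ratio of about 4:1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QaFigures:
    qa_cost: float         # EUR, from review records
    rework_cost: float     # EUR, educated guess
    reduced_damage: float  # EUR, educated guess

    def roi(self) -> float:
        """Reduced damage per euro spent on QA and rework."""
        return self.reduced_damage / (self.qa_cost + self.rework_cost)


inspections = QaFigures(qa_cost=3_800, rework_cost=70_800, reduced_damage=352_000)
reviews = QaFigures(qa_cost=20_500, rework_cost=7_000, reduced_damage=92_000)
combined = QaFigures(
    qa_cost=inspections.qa_cost + reviews.qa_cost,
    rework_cost=inspections.rework_cost + reviews.rework_cost,
    reduced_damage=inspections.reduced_damage + reviews.reduced_damage,
)

for name, figures in [("Inspections", inspections), ("Reviews", reviews), ("Total", combined)]:
    print(f"{name}: ~{figures.roi():.1f}:1")
```

This prints ratios of about 4.7:1, 3.3:1 and 4.3:1, which the table rounds to ~5:1, ~3:1 and ~4:1.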

Note that the Extreme Inspection approach could also have been applied to the content review stage, by using samples and suitable review rules for content. We chose not to, because the team of SMEs wanted to make sure everybody had the same understanding of all of the content (sample coverage 100%), which came from the concerted content discussion.

The only difference between a clarity inspection and a content inspection is the set of rules used to define defects, and thus to check for defects [Gilb2006]. The main effect, after each measurement and feedback cycle, is that people learn to reduce their own personal defect injection by about 50% per cycle.

Based on the results of Experiment B we derived the following set of early rules of thumb:

- No. of inspections: To bring use case specifications from about 24 to 8 defects per page, with a sample coverage of 3%, we need to do 0.13 inspections per page.
- Cost of inspections: To bring use case specifications from about 24 to 8 defects per page, with a sample coverage of 3%, we need to invest 266 EUR per page.
- Savings because of inspections: To bring use case specifications from about 24 to 8 defects per page, with a sample coverage of 3%, will save us 1,111 EUR per page.

| Statement (11 answers, 4 statements) | Grade (1 = disagree strongly .. 5 = agree strongly) |
|---|---|
| The process is helpful for improving project documents. | 4.3 |
| The process is helpful for saving money during the project and later, in maintenance. | 4.2 |
| In general, the combination of inspections and reviews makes sense and should be propagated to the whole organization. | 4.4 |
| The time I used for the inspection was well invested. | 4.5 |

Table 1: The assessment of the people involved.

Conclusions

The results speak for themselves. Based on this experience and the data gathered, here are my recommendations:

- Do Extreme Inspections; it pays big time!
- Setting numeric goals does wonders for motivating people to work according to standards.
- Choose your standards (specification rules) wisely.
- Don't do reviews to clean up specifications unless you also want to transfer knowledge, and are willing to spend training costs this way.
- Give authors (not necessarily checkers) enough guidance to interpret rules correctly.
- If possible, pay outside and offshore consultants by defect rate (by successfully exited documents, not hours spent) as an extra incentive to stick to rules.

But beware: you will need to invest some effort in each of the following:

- new skills (learning and teaching the method)
- convincing arguments, to get things rolling
- trust among the team members, because you don't want fights over single detected defects, or blame wars.

The method is not restricted to analysis-related material; it has been successfully applied to system architectures, all kinds of designs, code, and test cases. Just recently I used it on quotes in a sales context, with equally impressive results.

References

[Gilb2005] Tom Gilb, Competitive Engineering: A Handbook for Systems Engineering, Requirements Engineering, and Software Engineering Using Planguage, Butterworth-Heinemann, 2005, http://www.amazon.com/Competitive-Engineering-Handbook-Requirements-Planguage/dp/0750665076, visited 2009-12-06, 14:49 UTC+1

[Gilb2006] Tom Gilb, Rule-Based Design Reviews: Objective Design Reviews and Using Evolutionary Quantified Continuous Feedback to Judge Designs, http://www.gilb.com/tiki-download_file.php?fileId=45, visited 2009-12-06, 14:54 UTC+1

[Gilb2008] Tom Gilb, The Awful Truth about Reviews, Inspections, and Tests, http://gilb.com/tiki-download_file.php?fileId=181, visited 2009-12-07, 16:47 UTC+1

[Gilb2009] Tom & Kai Gilb, The Real QA Manifesto, http://www.result-planning.com/Real+QA+Manifesto&structure=Community+Pages, visited 2009-12-06, 14:47 UTC+1

[Gilb2009/2] Tom & Kai Gilb, The New Inspection and Review Process, http://www.result-planning.com/Inspection, visited 2009-12-06, 14:59 UTC+1

[Gotz2009] Rolf Götz, inspection results of client projects, used under a non-disclosure agreement

[IEEE] IEEE Std. 1028-1997, Annex B, IEEE Standard for Software Reviews

[Jones2008] Capers Jones, Defect Removal Ability Statistics, http://gilb.com/tiki-download_file.php?fileId=234, visited 2009-12-06, 14:39 UTC+1

[Malotaux2007] Niels Malotaux, Reviews & Inspections, http://malotaux.nl/nrm/pdf/ReviewInspCourse.pdf, visited 2009-12-06, 15:05 UTC+1

[Malotaux2007/2] Niels Malotaux, Inspection Manual - Procedures, rules, checklists and other texts for use in Inspections, http://www.malotaux.nl/nrm/pdf/InspManual.pdf, visited 2009-12-06, 15:21 UTC+1

Author: Rolf Götz

I’m an INxP-type systems engineer who focuses on value delivered, goals reached and requirements met. My strengths are a clear sense of quality, and passion about sustainable solutions.


If you would like to see pieces of my engineering and management work, please visit my blog (http://ClearConceptualThinking.net) or my wiki (http://PlanetProject.wikidot.com).